The numbers tell a sobering story. Fifty-six percent of college students admit to using AI tools on assignments or exams, and the rate of AI-related academic misconduct has tripled in just two years. We’re not talking about a few bad apples anymore; this is reshaping how students approach learning and, frankly, how teachers need to approach teaching.

Here’s the thing, though: AI isn’t going anywhere. The real question isn’t whether students will use these tools, but how we guide them to use them responsibly while protecting the integrity of education. Let’s dive into the five most common ways students are gaming the system with AI, and more importantly, what you can actually do about it.

- 5 Ways Students Cheat Using AI and Methods to Prevent It

- AI-Written Essays and Homework: The Instant Paper Factory

- AI for Problem-Solving: The Homework Helper on Steroids

- AI Paraphrasing: Plagiarism’s New Disguise

- AI During Online Tests: The Digital Cheating Epidemic

- AI as a Full “Ghostwriter”: When Projects Write Themselves

- The Technology Fighting Back: OnlineExamMaker’s AI Proctoring Solution

- Final Thoughts: Adapting to a New Reality

5 Ways Students Cheat Using AI and Methods to Prevent It

1. AI-Written Essays and Homework: The Instant Paper Factory

Let’s be honest—the allure is undeniable. A student types “Write me a 1,500-word essay on climate change’s impact on coastal ecosystems” into ChatGPT, and thirty seconds later, they’ve got something that looks remarkably like a B+ paper. Maybe they change a few words here, add a personal anecdote there, and voilà—homework done.

Research shows that 89% of students who use AI tools deploy them for homework assignments, with essays being the prime target. The pressure cooker of modern academia—competing deadlines, part-time jobs, extracurriculars—makes that instant gratification almost irresistible. Add language barriers for international students or the paralyzing fear of a bad grade, and you’ve got a perfect storm for AI-assisted shortcuts.

Why It’s Tempting

Time pressure doesn’t explain everything, though. Some students genuinely struggle with academic writing—constructing arguments, organizing thoughts, finding their voice. AI offers what feels like a lifeline. Others simply don’t see the harm; after all, they reason, doesn’t everyone use calculators for math?

Prevention Strategies That Actually Work

The knee-jerk reaction—banning AI or threatening harsh penalties—rarely works. Students find workarounds, and you’re back to square one. Instead, try these approaches:

Design assignments AI can’t fake. Generic prompts like “Discuss the themes in Hamlet” are AI candy. Instead, ask students to connect course material to their personal experiences, local events, or observations from class discussions. “How does Hamlet’s indecision compare to a difficult choice you’ve faced?” That’s harder to outsource to a chatbot.

Make the process visible. Require students to submit their work in stages—outlines, rough drafts with your comments, revision notes. Schedule brief one-on-one conversations where students explain their thesis and main arguments. If they can’t articulate their own ideas, that’s your red flag. Think of it as showing your math work, but for writing.

Embrace AI literacy. Here’s a radical thought: teach students to use AI as a legitimate tool. Have them generate an AI essay, then critique it together as a class. What’s missing? Where’s the depth lacking? What sources did it invent? This turns AI from enemy to teaching moment.

2. AI for Problem-Solving: The Homework Helper on Steroids

Math, physics, programming, statistics—any field with step-by-step solutions is now vulnerable. Students photograph a calculus problem, upload it to an AI tool, and receive not just the answer but a complete breakdown of how to solve it. Sounds educational, right?

The catch? Students often copy solutions without understanding the underlying concepts. They’re borrowing someone else’s brain (well, an AI’s silicon brain) rather than developing their own problem-solving muscles. Research indicates that between 39% and 48% of students use AI tools for problem-solving tasks, particularly in STEM fields.

The Deeper Problem

When exam time rolls around—particularly in-person exams without device access—these students hit a wall. They’ve never actually learned the problem-solving process. They memorized patterns from AI solutions without grasping the fundamental principles. It’s like learning to drive by watching videos; you might understand the theory, but good luck on your road test.

Prevention Strategies That Build Real Skills

Use context-specific problems. Generic textbook questions? Easy AI pickings. Instead, create problems using local data, school statistics, or community information. “Using measurements you collect in our gym, model the trajectory of a free throw on our school’s basketball court.” AI can explain projectile motion, but it can’t supply your school’s actual measurements.

Incorporate frequent low-stakes assessments. Pop quizzes get a bad rap, but here’s their secret weapon: they reveal who’s actually learning versus who’s outsourcing to AI. Keep them short, make them worth little individually, and focus on applying concepts rather than memorizing formulas. If students can’t solve basic problems without their devices, you’ve identified a learning gap.

Require work-showing that reveals thinking. Don’t just grade the final answer. Demand detailed explanations of each step, including why they chose particular methods. Better yet, include “explain your reasoning” questions. AI can calculate; it’s less adept at mimicking an individual student’s voice walking through their own thought process.

3. AI Paraphrasing: Plagiarism’s New Disguise

This one’s sneaky. A student finds a perfect article or uses a friend’s old paper, feeds it into an AI paraphrasing tool, and out comes “original” text that dodges plagiarism checkers. The ideas? Lifted. The words? Technically different. The ethical violation? Absolutely real, but harder to prove.

What troubles educators most is that many students don’t even recognize this as cheating. They genuinely believe that changing the wording transforms plagiarism into acceptable practice. It’s a loophole in their minds, a gray area they’ve convinced themselves is white.

The Ethics Disconnect

Part of the problem lies in inconsistent or unclear academic policies. About 60% of students report that their schools haven’t specified how to use AI tools ethically or responsibly. When the rules are fuzzy, students fill in the blanks with whatever serves their immediate needs. Can’t really blame them for exploiting ambiguity we created.

Prevention Through Clarity and Practice

Establish crystal-clear citation policies. Don’t assume students know what constitutes plagiarism in the AI era. Create explicit guidelines: “If you used AI to paraphrase any source, cite both the original source AND note that AI assisted with paraphrasing.” Make these rules accessible, discuss them regularly, and include them in assignment instructions.

Build skills through scaffolded practice. Instead of assigning one massive research paper, break it into components: find sources, write annotations summarizing each in your own words, draft sections with in-text citations, and synthesize everything into the final paper. This step-by-step approach makes AI shortcuts less appealing and develops genuine research skills.

Focus on interpretation over summary. Assign tasks requiring student voice and critical analysis rather than information regurgitation. “Summarize this article” invites AI paraphrasing. “Critique this article’s methodology and propose improvements” demands original thinking AI can’t easily replicate.

4. AI During Online Tests: The Digital Cheating Epidemic

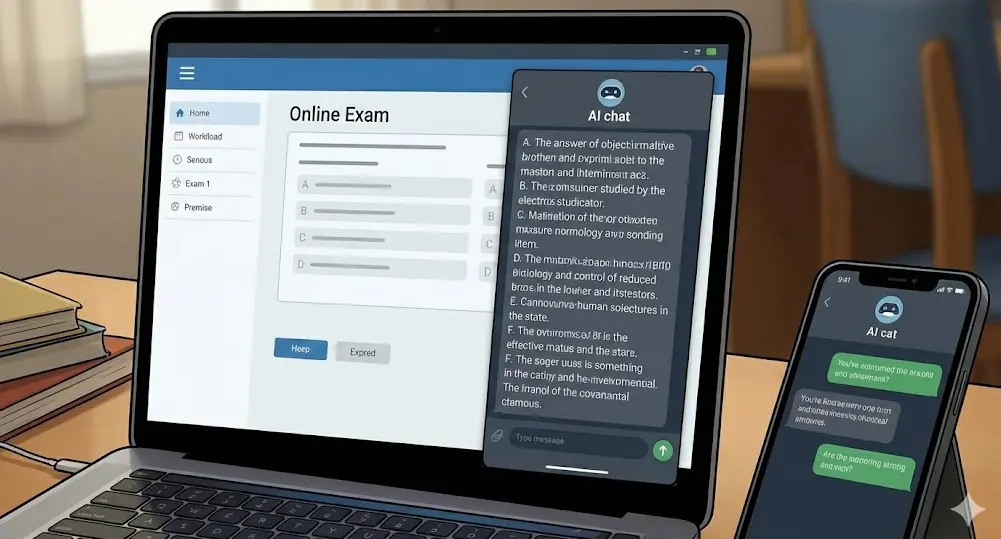

Unproctored online exams have become a free-for-all. Students keep AI chatbots open in another window, screenshot questions, or simply type queries into search engines augmented by AI. Multiple devices make it even easier—laptop for the exam, phone for the AI consultation.

The statistics are stark: AI-related academic misconduct now represents 60-64% of all cheating cases in higher education institutions globally. That’s a seismic shift from traditional plagiarism patterns, and it happened in the span of just two years.

The Arms Race

Here’s the uncomfortable truth: detection is difficult. AI detection tools exist, but they’re far from perfect, with false positive rates that can unfairly penalize honest students. Meanwhile, students share workarounds and techniques faster than educators can adapt. It’s an arms race nobody wins.

Prevention Through Smart Assessment Design

Implement frequent, lower-stakes testing. One high-stakes final exam creates enormous pressure to cheat. Ten smaller quizzes worth less individually? Students feel less desperate, have less time during each assessment to consult AI, and you get better data on their ongoing learning. Bonus: frequent testing actually improves retention through the testing effect.

Blend assessment methods. Combine online tests with other evaluation forms: short oral exams via video call, project presentations, practical demonstrations. If test performance seems suspiciously high but presentation skills lag behind, that discrepancy tells you something important.

Use advanced proctoring technology. This brings us to specialized solutions designed for the AI age. Preventing misconduct during the assessment is more effective, and fairer, than playing detective after the fact.

5. AI as a Full “Ghostwriter”: When Projects Write Themselves

The most comprehensive form of AI cheating: students use chatbots to generate entire project concepts, outlines, scripts, presentation slides, and speaker notes. They might customize a few details, insert their name, and present work they fundamentally didn’t create.

The polish can be impressive—perhaps suspiciously so. A student who struggles in class discussions suddenly delivers a sophisticated analysis. Red flags wave, but proving it? That’s another challenge entirely.

The Hidden Consequences

Beyond the obvious academic dishonesty, these students rob themselves of learning opportunities. Projects teach planning, research, synthesis, communication—skills crucial for professional success. When AI does the heavy lifting, students graduate with credentials but without capabilities. The job market won’t be as forgiving as the classroom.

Prevention Through Authentic Assessment

Require primary research and data collection. Design projects demanding interviews with community members, fieldwork observations, or original surveys. AI can help analyze data, but it can’t interview your neighbor or document local phenomena. Authentic research creates authentic learning.

Build in reflection and metacognition. Ask students to document their process: What AI tools did you use and for what purpose? What suggestions did you reject and why? How did your thinking evolve? This transparency serves dual purposes—it teaches responsible AI use and reveals who’s actually engaging with the material.

Present-and-defend format. After project submission, schedule brief sessions where students explain their work and answer questions. Someone who truly created their project can discuss methodology, defend choices, and elaborate on findings. Someone who copied from AI? They’ll stumble when pushed beyond surface-level understanding.

The Technology Fighting Back: OnlineExamMaker’s AI Proctoring Solution

While pedagogical strategies form your first line of defense, technology can reinforce those efforts—particularly for online assessments. OnlineExamMaker offers a comprehensive AI-powered proctoring system specifically designed to maintain exam integrity in the digital age.


How OnlineExamMaker Protects Academic Integrity

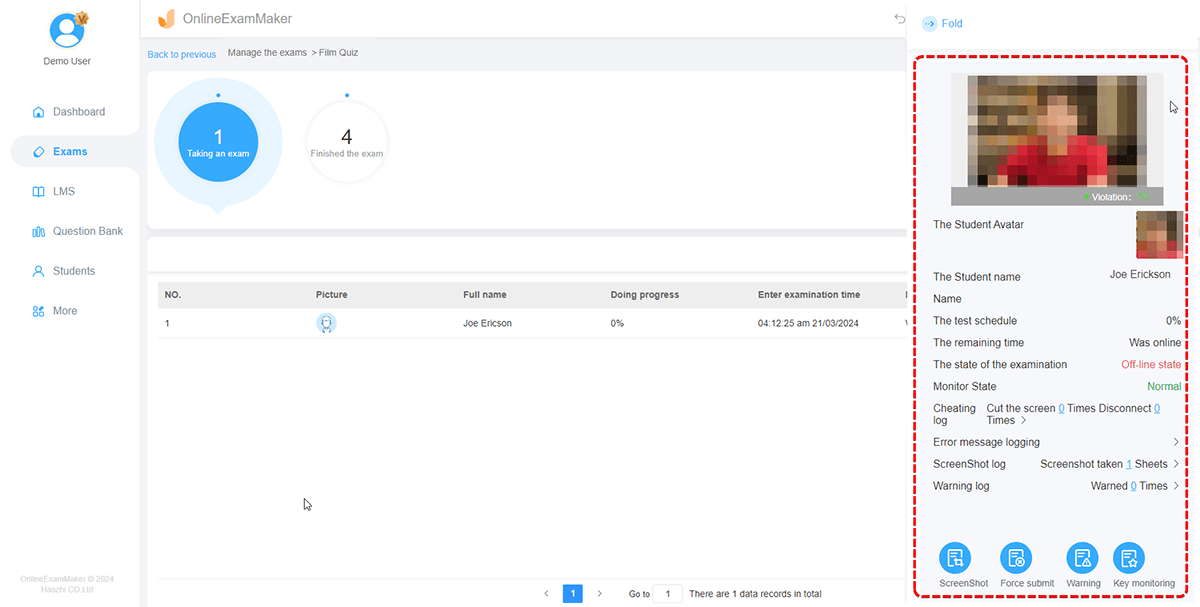

Facial Recognition Technology: The system uses AI-powered face recognition to verify test-taker identity. Before starting an exam, students upload a profile photo. The system then captures images via webcam throughout the test, comparing them to the stored photo to prevent proxy test-taking.

360-Degree Webcam Monitoring: Real-time video monitoring captures the entire test-taking process. The AI analyzes behavior patterns, detecting suspicious activities like:

- Lowering the head or turning away (potentially reading hidden materials)

- Multiple faces appearing on screen (unauthorized assistance)

- No face detected for extended periods (student has left the testing area)

- Speech or other sounds picked up by the microphone (talking to someone else, or giving voice commands to an AI assistant)

- Face matching failures (someone other than the registered student is taking the exam)

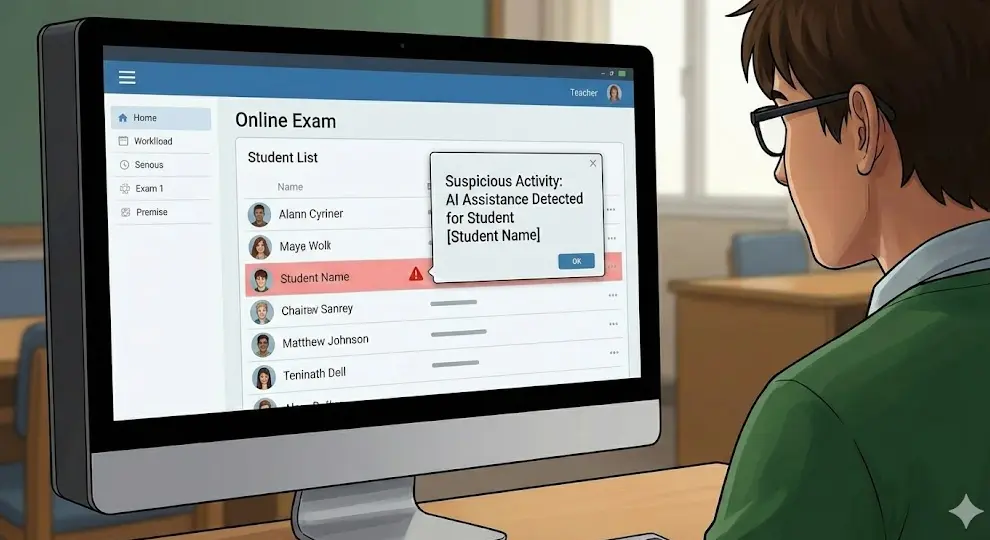

Intelligent Alert System: Administrators set thresholds for suspicious behaviors. If a student exceeds these limits—say, looking away more than five times—the system can automatically submit their exam or alert proctors for intervention.
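
To make the threshold idea concrete, here is a minimal Python sketch of count-based alerting. It is not OnlineExamMaker’s implementation or API: the event names (`looking_away`, `multiple_faces`), the `AlertPolicy` and `ProctoredSession` classes, and the two actions are hypothetical, chosen only to mirror the “more than five times” behavior described above.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field


@dataclass
class AlertPolicy:
    # Hypothetical event names -> how many occurrences are tolerated.
    thresholds: dict[str, int]
    # What to do once a limit is exceeded: "alert" a proctor or "auto_submit".
    action: str = "alert"


@dataclass
class ProctoredSession:
    student_id: str
    policy: AlertPolicy
    counts: Counter = field(default_factory=Counter)
    alerts: list[str] = field(default_factory=list)
    submitted: bool = False

    def record(self, event: str) -> str | None:
        """Count one flagged event; return the action triggered, if any."""
        if self.submitted:
            return None  # exam already closed; ignore further events
        self.counts[event] += 1
        limit = self.policy.thresholds.get(event)
        if limit is None or self.counts[event] <= limit:
            return None  # untracked event, or still within tolerance
        if self.policy.action == "auto_submit":
            self.submitted = True
        else:
            self.alerts.append(f"{self.student_id}: {event} x{self.counts[event]}")
        return self.policy.action


# "Looking away more than five times" triggers automatic submission.
policy = AlertPolicy(thresholds={"looking_away": 5, "multiple_faces": 0},
                     action="auto_submit")
session = ProctoredSession("s001", policy)
print([session.record("looking_away") for _ in range(6)])
# [None, None, None, None, None, 'auto_submit']
print(session.submitted)  # True
```

Counting each event type separately lets an instructor tolerate a few glances at scratch paper while flagging a second face immediately (a threshold of zero); where each limit sits remains a judgment call.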

Lockdown Browser Features: The platform enforces full-screen mode, preventing students from opening additional windows, tabs, or applications. It can detect screen-switching attempts and limit how many times students can leave the exam interface.

Randomization Tools: To prevent answer-sharing, OnlineExamMaker offers question randomization (drawing from question banks), order randomization (shuffling question sequence), and option randomization (rearranging multiple-choice answers). Each student sees a unique exam configuration.
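
The three kinds of randomization can be sketched in a few lines of Python. This is an illustration under assumptions, not the platform’s code: the question-bank format, the `build_exam` function and its `shuffle_order` flag, and the choice to seed the generator from the exam and student IDs are all hypothetical.

```python
import random

# Hypothetical bank: each entry stores the correct answer as text, not as a
# letter, so grading still works after the options are shuffled.
BANK = [
    {"id": f"Q{i}", "prompt": f"Question {i}",
     "options": ["alpha", "beta", "gamma", "delta"], "answer": "alpha"}
    for i in range(1, 21)
]


def build_exam(bank, n_questions, exam_id, student_id, shuffle_order=True):
    """Assemble one student's exam version from a question bank."""
    # Seeding from the exam and student IDs makes each version reproducible:
    # the same student gets the same exam back if they reconnect.
    rng = random.Random(f"{exam_id}:{student_id}")

    # Question randomization: draw a subset of the bank.
    # random.sample returns its picks in random order, which also covers
    # order randomization; restore the bank's order if that option is off.
    picked = rng.sample(bank, n_questions)
    if not shuffle_order:
        position = {q["id"]: i for i, q in enumerate(bank)}
        picked.sort(key=lambda q: position[q["id"]])

    exam = []
    for q in picked:
        options = list(q["options"])  # copy so the shared bank is not mutated
        rng.shuffle(options)          # option randomization
        exam.append({**q, "options": options})
    return exam


alice = build_exam(BANK, 5, "midterm", "alice")
print([q["id"] for q in alice])  # same five IDs every time for "alice"
```

Storing the answer as text rather than as a position is what keeps option shuffling from breaking the answer key.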

Real-Time Proctoring Dashboard: Administrators can monitor all active test-takers simultaneously through a centralized console, viewing live webcam feeds and receiving instant alerts for suspicious activity. After exams, detailed logs and captured images provide evidence for review.

Implementation Best Practices

Technology alone isn’t a silver bullet. For maximum effectiveness, OnlineExamMaker recommends allowing students to log in 30 minutes early to test their webcams, adjust lighting, and complete face verification before the exam begins. This prevents technical difficulties from causing legitimate students to fail identity checks.

The system also supports various assessment strategies educators have found effective: time limits per question (reducing the opportunity to consult AI), automatic submission after extended offline periods, and restrictions preventing multiple simultaneous logins from the same account.
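
Those three session rules might look something like the following Python sketch. Every name here (`SessionGuard`, `heartbeat`, `sweep`, `FakeClock`), the defaults of 90 seconds per question and 60 seconds offline, and the heartbeat mechanism itself are assumptions for illustration; OnlineExamMaker’s actual settings and internals are not described in this article.

```python
from __future__ import annotations

import time
from typing import Callable


class SessionGuard:
    """Hypothetical server-side rules: one login per account, a per-question
    time limit, and auto-submission after too long without a heartbeat."""

    def __init__(self, per_question_secs: float = 90, max_offline_secs: float = 60,
                 clock: Callable[[], float] = time.monotonic):
        self.per_question_secs = per_question_secs
        self.max_offline_secs = max_offline_secs
        self.clock = clock                      # injectable so the demo is deterministic
        self.tokens: dict[str, str] = {}        # student_id -> token of the active login
        self.last_seen: dict[str, float] = {}   # student_id -> time of last heartbeat
        self.shown_at: dict[str, float] = {}    # student_id -> when current question appeared
        self.submitted: set[str] = set()

    def login(self, student_id: str, token: str) -> bool:
        # Refuse a second, simultaneous login from a different session token.
        if self.tokens.get(student_id, token) != token:
            return False
        self.tokens[student_id] = token
        self.last_seen[student_id] = self.clock()
        return True

    def heartbeat(self, student_id: str, token: str) -> None:
        if self.tokens.get(student_id) == token and student_id not in self.submitted:
            self.last_seen[student_id] = self.clock()

    def show_question(self, student_id: str) -> None:
        self.shown_at[student_id] = self.clock()

    def accept_answer(self, student_id: str) -> bool:
        # Answers after the per-question limit (or after submission) are rejected.
        if student_id in self.submitted or student_id not in self.shown_at:
            return False
        return self.clock() - self.shown_at[student_id] <= self.per_question_secs

    def sweep(self) -> set[str]:
        # Call periodically: auto-submit anyone silent longer than the limit.
        now = self.clock()
        for sid, seen in self.last_seen.items():
            if now - seen > self.max_offline_secs:
                self.submitted.add(sid)
        return self.submitted


class FakeClock:
    """Manually advanced clock for the demo below."""

    def __init__(self) -> None:
        self.t = 0.0

    def __call__(self) -> float:
        return self.t


clock = FakeClock()
guard = SessionGuard(per_question_secs=90, max_offline_secs=60, clock=clock)
print(guard.login("alice", "tab-1"))  # True
print(guard.login("alice", "tab-2"))  # False: second simultaneous login refused
guard.show_question("alice")
clock.t = 45
guard.heartbeat("alice", "tab-1")
print(guard.accept_answer("alice"))   # True: 45 s is within the 90 s limit
clock.t = 120
print(guard.accept_answer("alice"))   # False: 120 s exceeds the limit
print(guard.sweep())                  # {'alice'}: 75 s since the last heartbeat
```

The clock is passed in as a parameter so the rules can be exercised without waiting in real time; a live deployment would presumably rely on the default monotonic clock.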

Most importantly, clear communication with students about proctoring measures builds trust. When students understand what’s being monitored and why, they’re more likely to accept these safeguards as fair rather than invasive. Transparency matters.

Final Thoughts: Adapting to a New Reality

Let’s zoom out for a moment. We’re witnessing a fundamental shift in education, similar to when calculators became ubiquitous or when the internet made information universally accessible. Each time, educators adapted—not by banning the technology, but by changing what and how we teach.

The same applies to AI. Research shows that 68% of instructors believe generative AI will negatively impact academic integrity, but that perspective might be missing the bigger picture. AI isn’t making students cheat; it’s exposing weaknesses in how we assess learning.

If an AI can ace your test or assignment, maybe that test or assignment was measuring memorization rather than understanding, regurgitation rather than critical thinking. The solution isn’t to wage war against AI—a war we’ll inevitably lose—but to redesign assessment around skills AI can’t replicate: creativity, ethical reasoning, original research, personal insight, real-world application.

Here’s the paradox: students need to learn to use AI responsibly because their future careers will likely require it. The World Economic Forum lists AI literacy among critical workforce skills. So we’re not preparing them for an AI-free world; we’re preparing them for an AI-integrated world where they need judgment about when and how to use these tools.

That means clear policies, yes. Robust proctoring for high-stakes exams, absolutely. But more fundamentally, it means rethinking what we value in education. Process over product. Thinking over answers. Growth over grades.

The students arriving in your classroom today will graduate into a world transformed by AI. Your job isn’t to shield them from that reality but to equip them to navigate it with integrity, critical thinking, and genuine competence. The cheating problem is real, but it’s also an opportunity—an invitation to build something better than what we had before.

After all, education has always been about more than just preventing cheating. It’s about inspiring learning. And in the age of AI, that mission matters more than ever.