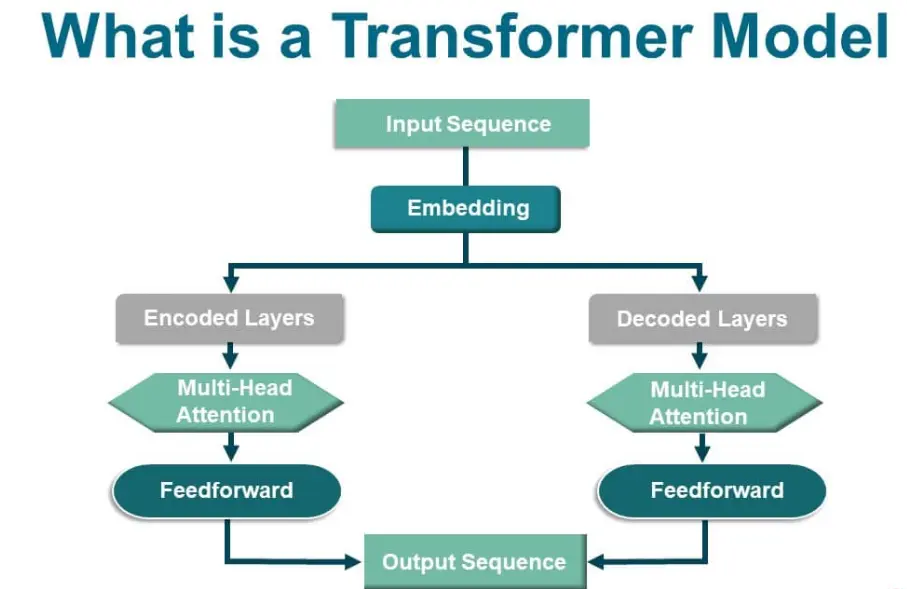

Transformer models are a neural network architecture introduced in 2017 by researchers at Google. At their core, they rely on a mechanism called self-attention, which lets the model weigh the importance of each word in a sequence relative to the others and process the whole sequence in parallel rather than one token at a time. This design removes the need for the recurrent or convolutional layers found in earlier sequence models, which makes transformers faster to train on large datasets.

Key components include:

Encoders: These process the input data, capturing contextual relationships through multiple layers of self-attention and feed-forward networks.

Decoders: Used in tasks like translation, they generate output sequences while attending to both the input and previously generated tokens.

Transformers have reshaped natural language processing (NLP), powering applications such as machine translation (e.g., Google Translate), text summarization, and sentiment analysis. They have also been extended beyond text, for example to images with Vision Transformers (ViT) and to audio. Their scalability has led to large models like GPT and BERT, which excel at understanding and generating human-like text and have driven advances in AI across industries.

Table of contents

- Part 1: OnlineExamMaker AI quiz generator – Save time and effort

- Part 2: 20 transformer models quiz questions & answers

- Part 3: Save time and energy: generate quiz questions with AI technology

Part 1: OnlineExamMaker AI quiz generator – Save time and effort

What’s the best way to create a transformer models quiz online? OnlineExamMaker is AI quiz-making software that requires no coding or design skills. If you don’t have time to create your online quiz from scratch, you can use the OnlineExamMaker AI Question Generator to create questions automatically, then add them to your online assessment. The platform also uses AI proctoring and AI grading to streamline the process while protecting exam integrity.

Key features of OnlineExamMaker:

● Uses AI webcam monitoring to detect cheating during online exams.

● Allows quiz takers to answer by uploading a video or a Word document, adding an image, or recording an audio file.

● Automatically scores multiple-choice, true/false, and even open-ended/audio responses using AI, reducing manual work.

● The OnlineExamMaker API gives developers private access to pull exam data back into their own systems automatically.

Part 2: 20 transformer models quiz questions & answers

Question 1:

What is the primary function of the self-attention mechanism in a Transformer model?

A) To process sequential data one element at a time

B) To weigh the importance of different words in the input sequence

C) To apply convolutional filters to the input

D) To generate positional encodings

Answer: B

Explanation: The self-attention mechanism allows the model to focus on different parts of the input sequence simultaneously, assigning weights to determine the relevance of each element to others, which improves parallel processing and context understanding.
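The weighting described above can be sketched in a few lines of plain Python. This is a minimal illustration of scaled dot-product attention, not a production implementation: the function names and the toy vectors are assumptions made for the example, and for simplicity the same vectors are used as queries, keys, and values.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors, used here as queries, keys, and values at once.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how much one token draws on every other token. No row depends on another, which is why the rows can be computed in parallel.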

Question 2:

In the Transformer architecture, what role does the encoder play?

A) It generates the output sequence

B) It processes the input sequence and creates representations

C) It handles only the decoder’s attention

D) It applies feed-forward networks exclusively

Answer: B

Explanation: The encoder takes the input sequence, processes it through self-attention and feed-forward layers, and produces contextualized representations that the decoder uses for tasks like translation.

Question 3:

Which component of the Transformer model helps it understand the order of words, since it lacks recurrence?

A) Multi-head attention

B) Positional encoding

C) Feed-forward networks

D) Layer normalization

Answer: B

Explanation: Positional encoding adds information about the position of each token in the sequence to the input embeddings. This is needed because self-attention on its own treats the input as an unordered set, whereas an RNN picks up order implicitly by reading tokens one at a time.
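The explanation above does not say how positions are encoded. One common choice is the sinusoidal scheme from the original Transformer paper, sketched below under that assumption; learned position embeddings are another option. The function names are illustrative.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one wavelength.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings):
    """Add the encoding for each position to that position's embedding."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, row)]
            for emb, row in zip(embeddings, pe)]

# The same toy embedding at two positions becomes distinguishable.
same = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
encoded = add_positions(same)
```

After the addition, two identical word embeddings at different positions no longer look identical to the attention layers.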

Question 4:

What is the purpose of multi-head attention in Transformers?

A) To focus on a single attention head for efficiency

B) To attend to different parts of the sequence simultaneously from multiple perspectives

C) To reduce the number of parameters in the model

D) To replace the encoder entirely

Answer: B

Explanation: Multi-head attention uses multiple attention heads in parallel, each capturing different relationships in the data, which enriches the model’s ability to capture complex dependencies.

Question 5:

In a standard Transformer, how are the queries, keys, and values generated?

A) From the output of the decoder only

B) From linear projections of the input embeddings

C) Randomly during training

D) Through convolutional layers

Answer: B

Explanation: Queries, keys, and values are derived from linear transformations of the input sequence, enabling the attention mechanism to compute relationships between elements efficiently.
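As a rough sketch of those linear projections: each token embedding is multiplied by three separate weight matrices. The matrices `W_Q`, `W_K`, and `W_V` below are hypothetical hand-picked values chosen to keep the arithmetic readable; in a real model they are learned during training.

```python
def linear(x, weight):
    """Multiply a vector x (length d_in) by a matrix with d_in rows and d_out columns."""
    return [sum(x[i] * weight[i][j] for i in range(len(x)))
            for j in range(len(weight[0]))]

# Hypothetical projection matrices for d_model = 2.
W_Q = [[1.0, 0.0], [0.0, 1.0]]
W_K = [[0.0, 1.0], [1.0, 0.0]]
W_V = [[2.0, 0.0], [0.0, 2.0]]

embeddings = [[1.0, 2.0], [3.0, 4.0]]  # one row per token

# The same input is projected three different ways.
queries = [linear(x, W_Q) for x in embeddings]
keys = [linear(x, W_K) for x in embeddings]
values = [linear(x, W_V) for x in embeddings]
```

Keeping three separate projections lets the model learn different views of the same token: what it is looking for (query), what it offers for matching (key), and what it contributes once matched (value).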

Question 6:

What advantage does the Transformer have over recurrent neural networks (RNNs)?

A) It processes sequences sequentially for better memory

B) It handles long-range dependencies more effectively through parallelization

C) It requires fewer computational resources

D) It is designed only for image data

Answer: B

Explanation: Transformers process the entire sequence in parallel via self-attention, which links any two positions directly. This lets them capture long-range dependencies without passing information through many recurrent steps, where RNNs commonly suffer from vanishing gradients.

Question 7:

Which layer in the Transformer applies a non-linear transformation to the output of the attention mechanism?

A) Positional encoding layer

B) Feed-forward network

C) Multi-head attention layer

D) Normalization layer

Answer: B

Explanation: The feed-forward network, applied to each position separately, consists of fully connected layers that introduce non-linearity and further process the attention outputs.
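A minimal sketch of such a position-wise network follows, assuming two linear layers with a ReLU between them (the activation used in the original paper). The weights and dimensions are hypothetical toy values.

```python
def feed_forward(x, w1, b1, w2, b2):
    """Two linear layers with a ReLU in between, applied to one token vector."""
    hidden = [max(0.0, sum(x[i] * w1[i][j] for i in range(len(x))) + b1[j])
              for j in range(len(b1))]
    return [sum(hidden[i] * w2[i][j] for i in range(len(hidden))) + b2[j]
            for j in range(len(b2))]

# Hypothetical weights: d_model = 2, inner dimension = 3.
w1 = [[1.0, -1.0, 0.5], [0.0, 1.0, 0.5]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]

attention_outputs = [[1.0, 2.0], [2.0, -1.0]]
# The same weights are reused at every position, with no mixing between tokens.
processed = [feed_forward(x, w1, b1, w2, b2) for x in attention_outputs]
```

The ReLU is what makes the transformation non-linear: for the second token, one hidden unit is negative before the activation and is clipped to zero.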

Question 8:

What is the role of residual connections in the Transformer architecture?

A) To skip layers entirely during training

B) To add the input of a sub-layer to its output, aiding gradient flow

C) To connect the encoder directly to the decoder

D) To normalize the attention weights

Answer: B

Explanation: Residual connections help mitigate the vanishing gradient problem by adding the sub-layer’s input to its output, facilitating deeper networks and better training stability.
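The idea fits in one line of code. In the sketch below, `tiny_sublayer` is a hypothetical stand-in for an attention or feed-forward sub-layer, and layer normalization is left out to keep the focus on the skip connection.

```python
def with_residual(sublayer, x):
    """Return x + sublayer(x), element by element."""
    return [xi + yi for xi, yi in zip(x, sublayer(x))]

def tiny_sublayer(x):
    """Hypothetical stand-in for attention or a feed-forward network."""
    return [0.1 * xi for xi in x]

x = [1.0, -2.0, 3.0]
y = with_residual(tiny_sublayer, x)

# Even if the sub-layer outputs zeros, the input still passes through unchanged.
passthrough = with_residual(lambda v: [0.0] * len(v), x)
```

Because the input is always added back, each layer only has to learn a correction to its input, and gradients have a direct path through the addition.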

Question 9:

In the decoder of a Transformer, what additional attention mechanism is used?

A) Only self-attention

B) Encoder-decoder attention

C) Positional attention

D) Feed-forward attention

Answer: B

Explanation: The decoder uses encoder-decoder attention to focus on the encoder’s output while generating the sequence, allowing it to incorporate context from the input.

Question 10:

What type of data is most commonly associated with Transformer models?

A) Images only

B) Sequential data like text or time series

C) Audio signals exclusively

D) Graph structures

Answer: B

Explanation: Transformers were originally designed for sequence-to-sequence tasks in natural language processing, making them ideal for text data, though they have been adapted to other domains.

Question 11:

How does the Transformer handle variable-length input sequences?

A) By padding all sequences to a fixed length

B) Through dynamic attention that adapts to sequence length

C) By truncating sequences during processing

D) Using recurrent loops

Answer: B

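Explanation: Self-attention works on however many tokens the sequence contains, so the architecture does not require a fixed input length. When sequences of different lengths are batched together, shorter ones are padded and a padding mask keeps the padded positions out of the attention computation, so the padding does not affect the result.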
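The padding-and-mask approach can be sketched as follows. The padding id `PAD = 0`, the token ids, and the raw scores are all hypothetical values for illustration, and the helper assumes at least one position in each row is unmasked.

```python
import math

PAD = 0  # hypothetical token id reserved for padding

def masked_softmax(scores, keep):
    """Softmax over scores, giving zero weight wherever keep is False."""
    masked = [s if k else float("-inf") for s, k in zip(scores, keep)]
    peak = max(masked)  # assumes at least one position is kept
    exps = [math.exp(s - peak) for s in masked]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

batch = [[5, 8, 2], [7, 3]]
max_len = max(len(seq) for seq in batch)
padded = [seq + [PAD] * (max_len - len(seq)) for seq in batch]
keep_masks = [[tok != PAD for tok in seq] for seq in padded]

# Raw attention scores for one query in the second (shorter) sequence.
weights = masked_softmax([0.2, 1.1, 0.9], keep_masks[1])
```

The padded third position receives exactly zero weight, so the two real tokens share all of the attention.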

Question 12:

What is the output of the Transformer’s encoder?

A) A single vector representation

B) A sequence of vectors for each input token

C) The final decoded output

D) Positional encodings only

Answer: B

Explanation: The encoder outputs a sequence of hidden states, one for each input token, which the decoder then uses as context for generating the output sequence.

Question 13:

Which variant of the Transformer model is primarily used for language modeling tasks like text generation?

A) BERT

B) GPT

C) T5

D) All of the above

Answer: B

Explanation: GPT (Generative Pre-trained Transformer) is designed for autoregressive language modeling, where it predicts the next token based on previous ones, making it suitable for generation tasks.
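Autoregressive generation can be illustrated with a greedy decoding loop. In this sketch, `toy_model` is a hypothetical lookup table standing in for a trained network, and greedy selection is only one of several decoding strategies.

```python
def generate(next_token_scores, prompt, max_new_tokens, end_token):
    """Greedy decoding: repeatedly append the highest-scoring next token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)  # depends only on the tokens so far
        best = max(scores, key=scores.get)
        tokens.append(best)
        if best == end_token:
            break
    return tokens

def toy_model(tokens):
    """Hypothetical stand-in for a trained model: scores keyed on the last token."""
    table = {
        "the": {"cat": 0.9, "sat": 0.1},
        "cat": {"sat": 0.8, "<end>": 0.2},
        "sat": {"<end>": 1.0},
    }
    return table[tokens[-1]]

result = generate(toy_model, ["the"], max_new_tokens=5, end_token="<end>")
```

Each new token is chosen from the tokens produced so far and then fed back in as input, which is the loop a GPT-style model runs at inference time.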

Question 14:

In Transformers, what does layer normalization do?

A) Normalize the input data across the entire sequence

B) Normalize the activations of each layer for each token individually

C) Replace positional encodings

D) Standardize attention weights

Answer: B

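Explanation: Layer normalization stabilizes and speeds up training by rescaling each token’s feature vector to zero mean and unit variance, followed by a learned scale and shift. The statistics are computed per token across the feature dimension, not across the sequence or the batch.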
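A minimal per-token layer normalization might look like the sketch below. The `gain` and `bias` parameters are learned in a real model; here they default to 1 and 0, and the `eps` value is an assumed small constant for numerical safety.

```python
import math

def layer_norm(x, gain=None, bias=None, eps=1e-5):
    """Normalize one token's feature vector to zero mean and unit variance."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    normed = [(xi - mean) / math.sqrt(var + eps) for xi in x]
    gain = gain or [1.0] * len(x)  # learned scale in a real model
    bias = bias or [0.0] * len(x)  # learned shift in a real model
    return [g * n + b for g, n, b in zip(gain, normed, bias)]

# Each token is normalized on its own; other tokens play no part.
tokens = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0]]
normalized = [layer_norm(t) for t in tokens]
```

The two toy tokens differ in scale by a factor of ten, yet their normalized vectors come out almost identical, since each is rescaled using only its own mean and variance.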

Question 15:

What is the computational complexity of the self-attention mechanism in Transformers?

A) O(1) for constant time

B) O(n^2 * d) where n is sequence length and d is model dimension

C) O(n * d) for linear scaling

D) O(n^3) for cubic growth

Answer: B

Explanation: Self-attention’s complexity arises from computing attention scores between all pairs of tokens, resulting in quadratic growth with sequence length, which can be a bottleneck for very long sequences.
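To make the quadratic growth concrete, the short sketch below counts the multiply-adds needed just to score every token against every other token. The count ignores constant factors and the other parts of the layer, and `d_model = 512` is an assumed example value.

```python
def attention_score_ops(n, d):
    """Multiply-adds needed to compute all n x n dot products of length d."""
    return n * n * d

d_model = 512  # assumed model dimension for the example
ops = {n: attention_score_ops(n, d_model) for n in (128, 256, 512)}

for n, count in ops.items():
    print(f"n={n}: {count} multiply-adds")
```

Doubling the sequence length from 128 to 256 quadruples the count, and doubling it again quadruples it once more.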

Question 16:

How are masks used in the Transformer decoder?

A) To prevent the model from attending to future tokens

B) To ignore positional encodings

C) To reduce the number of attention heads

D) To filter out stop words

Answer: A

Explanation: The decoder uses a look-ahead mask in self-attention to ensure that predictions for a token do not depend on subsequent tokens, maintaining autoregressive properties.
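A look-ahead mask is a lower-triangular pattern over the attention scores. The sketch below builds one and applies it to toy scores; the helper names and the score values are illustrative assumptions.

```python
def causal_mask(n):
    """Row i allows columns 0..i: a token sees itself and earlier tokens only."""
    return [[j <= i for j in range(n)] for i in range(n)]

def apply_mask(scores, mask):
    """Replace disallowed scores with -inf so a later softmax gives them zero weight."""
    return [[s if ok else float("-inf") for s, ok in zip(row, allowed)]
            for row, allowed in zip(scores, mask)]

mask = causal_mask(3)
scores = [[0.5, 2.0, 1.0],
          [0.3, 0.7, 1.5],
          [1.2, 0.1, 0.4]]
masked_scores = apply_mask(scores, mask)
```

After masking, the first row keeps only its own score, the second row keeps two, and the last row keeps all three, so no position can draw on tokens that come after it.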

Question 17:

Which technique is used in Transformers to combine multiple attention heads?

A) Averaging their outputs

B) Concatenating and linearly projecting them

C) Subtracting them for differences

D) Multiplying their weights

Answer: B

Explanation: The outputs of multiple attention heads are concatenated and then passed through a linear layer, allowing the model to integrate diverse attention patterns effectively.
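The concatenate-then-project step can be sketched for a single token as follows. The two head outputs and the output matrix `w_o` are hypothetical; an identity matrix is used only so the result is easy to check by eye, whereas a trained model learns this matrix.

```python
def combine_heads(head_outputs, w_o):
    """Concatenate per-head vectors for one token, then apply the output projection."""
    concat = [value for head in head_outputs for value in head]
    return [sum(concat[i] * w_o[i][j] for i in range(len(concat)))
            for j in range(len(w_o[0]))]

# Two hypothetical heads, each producing a 2-dimensional vector for one token.
head_outputs = [[1.0, 2.0], [3.0, 4.0]]

# Hypothetical 4x4 output projection (identity, for readability).
w_o = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

combined = combine_heads(head_outputs, w_o)
```

With a learned `w_o`, every output dimension can mix information from all heads, which is how the separate attention patterns get merged into one representation.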

Question 18:

What is a key benefit of using Transformers in machine translation?

A) It requires less training data

B) It captures global dependencies across the entire sentence at once

C) It operates sequentially like RNNs

D) It eliminates the need for attention mechanisms

Answer: B

Explanation: Transformers’ parallel processing and self-attention enable them to consider the full context of a sentence simultaneously, leading to better translation accuracy for long-range relationships.

Question 19:

In the original Transformer paper, how many layers are used in each of the encoder and decoder stacks?

A) 1 layer each

B) 6 layers each

C) 12 layers each

D) Variable, depending on the task

Answer: B

Explanation: The original Transformer architecture used 6 layers in both the encoder and decoder, as described in the paper by Vaswani et al., though modern variants often adjust this number.

Question 20:

What makes the Transformer model scalable for large datasets?

A) Its use of recurrent connections

B) Parallelization of computations and efficient attention mechanisms

C) Limited parameter size

D) Dependency on external memory

Answer: B

Explanation: Because self-attention processes all positions of a sequence in parallel, Transformers map well onto GPUs and other modern accelerators, which makes large-scale training practical. The trade-off is that attention cost grows quadratically with sequence length.

Part 3: Save time and energy: generate quiz questions with AI technology

Automatically generate questions using AI