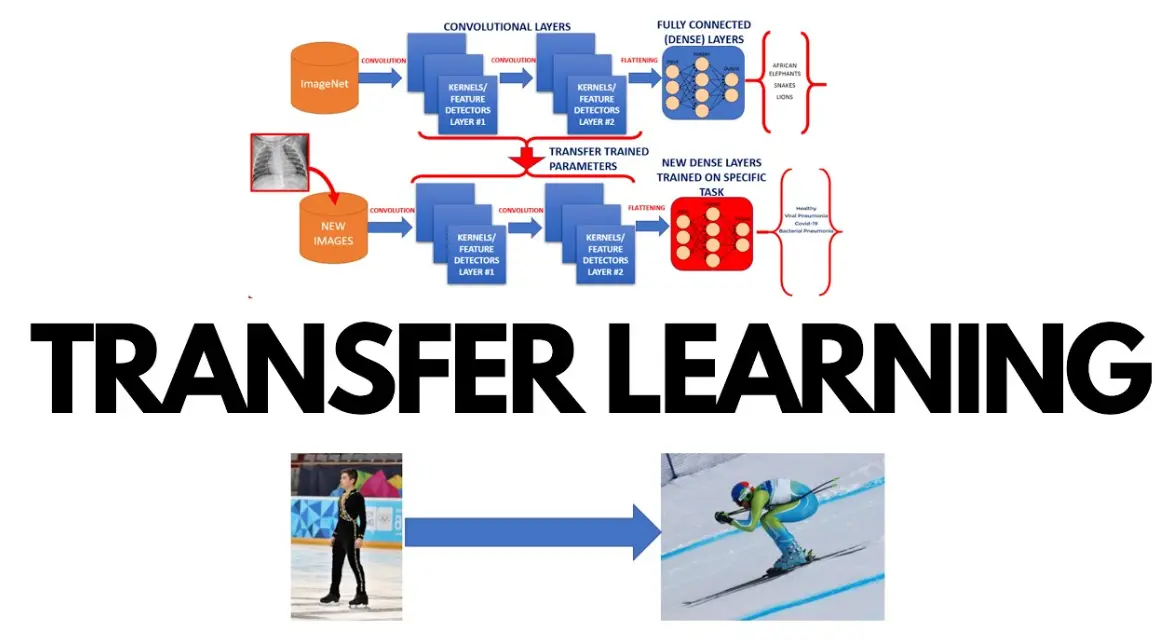

Transfer learning is a machine learning technique where a pre-trained model, developed for one task, is repurposed and fine-tuned for a different but related task. This approach leverages knowledge gained from the original task to improve efficiency and performance on the new one, particularly when data for the new task is limited.

At its core, transfer learning involves three main stages:

Pre-training: A model is trained on a large, general dataset (e.g., ImageNet for images) to learn broad features.

Feature Extraction: The pre-trained model’s layers are used as a fixed feature extractor, with new layers added for the specific task.

Fine-tuning: The entire model or parts of it are adjusted using the target dataset to adapt to the new task.
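The stages above can be sketched with a deliberately tiny model in plain Python. This is an illustrative toy, not a real workflow: the scalar `base_w` stands in for a pre-trained layer, `head_w` and `head_b` stand in for the newly added task-specific layer, and the data, learning rates, and step counts are all assumptions chosen for the example.

```python
# Toy model: prediction = head_w * (base_w * x) + head_b
# base_w   -> hypothetical "pre-trained" layer
# head_w/b -> hypothetical new layer added for the target task

def predict(params, x):
    return params["head_w"] * (params["base_w"] * x) + params["head_b"]

def mse(params, data):
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)

def gradients(params, data):
    """Gradients of the mean squared error with respect to each parameter."""
    n = len(data)
    grads = {"base_w": 0.0, "head_w": 0.0, "head_b": 0.0}
    for x, y in data:
        err = predict(params, x) - y
        grads["base_w"] += 2 * err * params["head_w"] * x / n
        grads["head_w"] += 2 * err * params["base_w"] * x / n
        grads["head_b"] += 2 * err / n
    return grads

def train(params, data, trainable, lr, steps):
    """Gradient descent that only updates the parameters named in `trainable`."""
    params = dict(params)  # leave the caller's parameters untouched
    for _ in range(steps):
        grads = gradients(params, data)
        for name in trainable:
            params[name] -= lr * grads[name]
    return params

# Stage 1 (pre-training) is assumed to have happened elsewhere:
# base_w = 2.0 plays the role of weights learned on a large source dataset.
pretrained = {"base_w": 2.0, "head_w": 0.0, "head_b": 0.0}

# Small target dataset for the new task (assumed relationship: y = 3x + 1).
target_data = [(x, 3.0 * x + 1.0) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

# Stage 2 (feature extraction): base_w is frozen, only the new head is trained.
extracted = train(pretrained, target_data,
                  trainable=("head_w", "head_b"), lr=0.1, steps=20)

# Stage 3 (fine-tuning): every parameter is unfrozen and trained
# with a smaller learning rate.
fine_tuned = train(extracted, target_data,
                   trainable=("base_w", "head_w", "head_b"), lr=0.01, steps=50)

print("loss before training:     ", mse(pretrained, target_data))
print("loss after head training: ", mse(extracted, target_data))
print("loss after fine-tuning:   ", mse(fine_tuned, target_data))
```

In a real project the frozen part would be a deep network loaded from a library, but the control flow is the same: choose which parameters are trainable, train the new layers first, then optionally unfreeze more of the model with a lower learning rate.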

Key benefits include:

Reduced training time and computational resources compared to training from scratch.

Improved accuracy, especially for tasks with small datasets.

Enhanced generalization by building on robust, learned representations.

Applications span various fields:

Computer Vision: Adapting models for object detection in medical imaging or autonomous vehicles.

Natural Language Processing: Fine-tuning models like BERT for sentiment analysis or question-answering.

Healthcare and Finance: Using pre-trained models for personalized predictions or fraud detection.

Challenges may arise if the source and target tasks are too dissimilar, potentially leading to negative transfer. Despite this, transfer learning has become a cornerstone of modern AI, enabling rapid innovation with existing models.
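Negative transfer can be illustrated with a small, contrived comparison in plain Python. The sketch below assumes a hypothetical frozen extractor `source_features` that learned to keep only the magnitude of its input (`x * x`), while the target task depends on the sign. The data and the extractor are invented for illustration only.

```python
def fit_line(features, targets):
    """Closed-form least squares for targets ~ a * feature + b."""
    n = len(features)
    mean_f = sum(features) / n
    mean_t = sum(targets) / n
    var_f = sum((f - mean_f) ** 2 for f in features) / n
    cov = sum((f - mean_f) * (t - mean_t)
              for f, t in zip(features, targets)) / n
    a = cov / var_f
    b = mean_t - a * mean_f
    return a, b

def mse(features, targets, a, b):
    return sum((a * f + b - t) ** 2
               for f, t in zip(features, targets)) / len(targets)

def source_features(x):
    # Hypothetical frozen extractor from a dissimilar source task:
    # it discards the sign of x, which the target task needs.
    return x * x

# Target task (assumed): predict y = x.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = list(xs)

# Option 1: train a new head on top of the transferred features.
transferred = [source_features(x) for x in xs]
a_t, b_t = fit_line(transferred, ys)
transfer_error = mse(transferred, ys, a_t, b_t)

# Option 2: train from scratch on the raw input.
a_s, b_s = fit_line(xs, ys)
scratch_error = mse(xs, ys, a_s, b_s)

# Negative transfer: the transferred model is worse than the baseline.
negative_transfer = transfer_error > scratch_error
print(transfer_error, scratch_error, negative_transfer)
```

The practical takeaway is the comparison itself: evaluating a from-scratch baseline next to the transferred model is a simple way to notice when the source features are hurting rather than helping.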

Table of contents

- Part 1: Create a transfer learning quiz in minutes using AI with OnlineExamMaker

- Part 2: 20 transfer learning quiz questions & answers

- Part 3: AI Question Generator – Automatically create questions for your next assessment

Part 1: Create a transfer learning quiz in minutes using AI with OnlineExamMaker

Are you looking for an online assessment to test your learners' knowledge of transfer learning? OnlineExamMaker uses artificial intelligence to help quiz organizers create, manage, and analyze exams or tests automatically. Apart from its AI features, OnlineExamMaker offers advanced security features such as a full-screen lockdown browser, online webcam proctoring, and face ID recognition.

Recommended features for you:

● Includes a safe exam browser (lockdown mode), webcam and screen recording, live monitoring, and chat oversight to prevent cheating.

● Makes assessments more interactive by embedding video, audio, and images in quizzes and in multimedia feedback.

● Once the exam ends, the exam scores, question reports, ranking and other analytics data can be exported to your device in Excel file format.

● Offers question analysis to evaluate question performance and reliability, helping instructors optimize their training plan.


Part 2: 20 transfer learning quiz questions & answers


1. Question: What is transfer learning in machine learning?

Options:

A. Training a model from scratch on a new dataset.

B. Using knowledge from a pre-trained model to solve a different but related problem.

C. Combining multiple datasets for a single model.

D. Deleting and retraining a model entirely.

Answer: B

Explanation: Transfer learning leverages features learned from a source task to improve performance on a target task, reducing the need for large amounts of new data and computational resources.

2. Question: Which of the following is a primary advantage of transfer learning?

Options:

A. It requires more data for training.

B. It reduces training time and improves accuracy on smaller datasets.

C. It only works with unrelated tasks.

D. It eliminates the need for any model adjustments.

Answer: B

Explanation: Transfer learning allows models to build on existing knowledge, making it efficient for tasks with limited data by transferring learned features from a related domain.

3. Question: In transfer learning, what does “fine-tuning” typically involve?

Options:

A. Freezing all layers of a pre-trained model.

B. Adjusting the weights of some layers in a pre-trained model for a new task.

C. Training a completely new model from the start.

D. Removing features from the original model.

Answer: B

Explanation: Fine-tuning updates specific layers of a pre-trained model with new data, allowing the model to adapt while retaining useful general features from the original training.

4. Question: When is transfer learning most effective?

Options:

A. When the source and target tasks are completely unrelated.

B. When the source and target tasks share similar features or domains.

C. When using very large datasets for every task.

D. When avoiding any pre-trained models.

Answer: B

Explanation: Transfer learning works best when the source task’s learned representations are relevant to the target task, enabling better generalization with less data.

5. Question: What is the difference between inductive and transductive transfer learning?

Options:

A. Inductive uses labeled data from the target domain, while transductive does not.

B. Inductive focuses on unrelated tasks, while transductive uses related ones.

C. There is no difference; they are the same.

D. Inductive requires more computational power.

Answer: A

Explanation: In inductive transfer learning, at least some labeled data from the target domain is available to train the model. In transductive transfer learning, labeled data exists only in the source domain, so the model must adapt using unlabeled target data.

6. Question: Which pre-trained model is commonly used for transfer learning in computer vision?

Options:

A. BERT

B. ResNet

C. LSTM

D. Decision Trees

Answer: B

Explanation: ResNet, trained on large datasets like ImageNet, provides robust feature extraction that can be transferred to new image-related tasks, enhancing performance.

7. Question: In natural language processing, what is an example of transfer learning?

Options:

A. Training a model solely on a new language dataset.

B. Fine-tuning a BERT model for sentiment analysis.

C. Building a model without any pre-existing data.

D. Using random word embeddings.

Answer: B

Explanation: BERT, pre-trained on vast text corpora, can be fine-tuned for specific NLP tasks like sentiment analysis, transferring linguistic knowledge effectively.

8. Question: Why might transfer learning fail in some cases?

Options:

A. If the source and target domains are too dissimilar.

B. If too much data is available for the new task.

C. If the model is trained from scratch.

D. If no pre-trained models exist.

Answer: A

Explanation: If the features learned in the source domain do not align with the target domain, negative transfer can occur, degrading the model’s performance.

9. Question: What role does domain adaptation play in transfer learning?

Options:

A. It ensures tasks are always unrelated.

B. It adapts a model from one domain to another with minimal labeled data.

C. It deletes the original model’s features.

D. It requires training multiple new models.

Answer: B

Explanation: Domain adaptation techniques in transfer learning help bridge the gap between source and target domains, improving accuracy when distributions differ.

10. Question: In transfer learning, what is feature extraction?

Options:

A. Removing irrelevant features from data.

B. Using the output of a pre-trained model’s layers as input for a new model.

C. Fully retraining all layers.

D. Ignoring pre-trained models entirely.

Answer: B

Explanation: Feature extraction involves taking fixed features from a pre-trained model and using them as a starting point for a new classifier, without altering the original layers.

11. Question: Which scenario is ideal for applying transfer learning?

Options:

A. A task with abundant data and no time constraints.

B. A task with limited data in a related field.

C. A completely novel task with no prior models.

D. Training models in isolation.

Answer: B

Explanation: Transfer learning is particularly useful for tasks with scarce data, where leveraging knowledge from a related pre-trained model accelerates learning.

12. Question: How does transfer learning help in reducing overfitting?

Options:

A. By using more complex models.

B. By initializing with robust features from a large dataset.

C. By ignoring validation data.

D. By training on unrelated tasks.

Answer: B

Explanation: Pre-trained features provide a strong foundation, allowing models to generalize better and reduce overfitting, especially on small datasets.

13. Question: What is a common challenge in transfer learning for reinforcement learning?

Options:

A. Tasks are always identical.

B. Policies from the source task may not directly apply to the target environment.

C. It requires no adaptation.

D. Overabundance of data.

Answer: B

Explanation: In reinforcement learning, transfer learning must account for differences in environments, as direct policy transfer can lead to suboptimal results.

14. Question: Which library is often used for implementing transfer learning in deep learning?

Options:

A. Pandas

B. TensorFlow or PyTorch

C. Excel

D. SQL

Answer: B

Explanation: TensorFlow and PyTorch provide tools for loading pre-trained models and fine-tuning them, making transfer learning straightforward in practice.

15. Question: In transfer learning, what happens during the freezing of layers?

Options:

A. All layers are retrained.

B. Certain layers are kept unchanged while others are updated.

C. The entire model is deleted.

D. New layers are added without changes.

Answer: B

Explanation: Freezing layers preserves learned features from the pre-trained model, allowing only specific parts to be fine-tuned for the new task.

16. Question: Why is transfer learning popular in medical image analysis?

Options:

A. Medical data is always abundant.

B. It allows using models trained on large public datasets for specialized tasks with limited medical data.

C. It avoids any pre-trained models.

D. It focuses on unrelated fields.

Answer: B

Explanation: Transfer learning enables the adaptation of models from general image datasets to medical imaging, overcoming the challenge of scarce annotated data.

17. Question: What is the concept of “negative transfer” in transfer learning?

Options:

A. When transfer improves performance significantly.

B. When knowledge from the source task hinders the target task.

C. When no transfer occurs.

D. When models are trained twice.

Answer: B

Explanation: Negative transfer happens when the source task’s features mislead the model on the target task, resulting in worse performance than training from scratch.

18. Question: How does transfer learning apply to self-driving cars?

Options:

A. By ignoring pre-trained vision models.

B. By transferring knowledge from general object detection models to specific driving scenarios.

C. By using only simulation data.

D. By retraining everything from scratch.

Answer: B

Explanation: Pre-trained models for object detection can be fine-tuned for autonomous driving, adapting to real-world conditions with less data.

19. Question: What factor influences the success of transfer learning?

Options:

A. The source and target tasks being identical.

B. The similarity between the data distributions of source and target domains.

C. Using minimal pre-trained models.

D. Avoiding any fine-tuning.

Answer: B

Explanation: The degree of similarity in data distributions determines how effectively features can be transferred, leading to better outcomes.

20. Question: In transfer learning, what is the purpose of a “bottleneck layer”?

Options:

A. To add more layers to the model.

B. To reduce the dimensionality of features for transfer.

C. To eliminate pre-trained data.

D. To increase computational complexity.

Answer: B

Explanation: The bottleneck layer compresses features in models like autoencoders, making it easier to transfer and adapt learned representations to new tasks.
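One practical use of a compact bottleneck representation is that the frozen layers' outputs can be computed once per example and reused across training epochs. The sketch below is a plain-Python illustration under stated assumptions: `FROZEN_WEIGHTS` is a hypothetical fixed projection from 4 input values to 2 bottleneck features, and the dataset and helper names are invented for the example.

```python
# Hypothetical frozen weights: project a 4-D input down to a 2-D bottleneck.
FROZEN_WEIGHTS = [
    [0.5, -0.5, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
]

stats = {"extractor_calls": 0}  # counts how often the frozen layer runs
cache = {}                      # example id -> cached bottleneck features

def bottleneck(x):
    """Frozen layer: returns a 2-D feature vector for a 4-D input."""
    stats["extractor_calls"] += 1
    return [sum(w * v for w, v in zip(row, x)) for row in FROZEN_WEIGHTS]

def cached_bottleneck(example_id, x):
    """Compute bottleneck features once per example, then reuse them."""
    if example_id not in cache:
        cache[example_id] = bottleneck(x)
    return cache[example_id]

# Tiny made-up dataset keyed by example id.
dataset = {
    "a": [1.0, 1.0, 2.0, 0.0],
    "b": [2.0, 0.0, 0.0, 3.0],
    "c": [0.0, 4.0, 1.0, 1.0],
}

for epoch in range(5):
    for example_id, x in dataset.items():
        features = cached_bottleneck(example_id, x)
        # A new classifier head would be trained on `features` here.

print(stats["extractor_calls"])  # the frozen layer ran once per example
```

Caching like this only makes sense while the layers that produce the features stay frozen; once they are fine-tuned, the cached values go stale and must be recomputed.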


Part 3: AI Question Generator – Automatically create questions for your next assessment

Automatically generate questions using AI