BERT (Bidirectional Encoder Representations from Transformers) is a natural language processing (NLP) model that Google introduced in 2018. It uses a deep bidirectional Transformer architecture to understand the context of words in a sentence by...
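
"Bidirectional" means that each token's representation can draw on the words both before and after it. Below is a minimal, dependency-free sketch that contrasts a bidirectional attention mask with a left-to-right (causal) one. It assumes a single attention head with no learned query/key/value projections. The helper names `attention_mask` and `self_attention` and the toy embeddings are hypothetical illustrations, not part of any BERT library.

```python
import math
from typing import List

Matrix = List[List[float]]
Mask = List[List[bool]]


def attention_mask(n: int, bidirectional: bool) -> Mask:
    """mask[i][j] is True when token i is allowed to attend to token j."""
    # Bidirectional (BERT-style encoder): every token sees the whole sentence.
    # Left-to-right (causal): token i sees only tokens 0..i.
    return [[bidirectional or j <= i for j in range(n)] for i in range(n)]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0 for masked positions
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, mask: Mask) -> Matrix:
    """Single-head scaled dot-product attention with Q = K = V = x (no learned weights)."""
    n, d = len(x), len(x[0])
    out: Matrix = []
    for i in range(n):
        scores = [
            sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) if mask[i][j] else -math.inf
            for j in range(n)
        ]
        weights = softmax(scores)
        # Each output vector is a weighted mix of the vectors token i may attend to.
        out.append([sum(weights[j] * x[j][k] for j in range(n)) for k in range(d)])
    return out


# Hypothetical 2-d embeddings for a 3-token sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
causal = self_attention(tokens, attention_mask(3, bidirectional=False))
bidirectional = self_attention(tokens, attention_mask(3, bidirectional=True))
print("causal, token 0:       ", causal[0])         # [1.0, 0.0]: only sees itself
print("bidirectional, token 0:", bidirectional[0])  # also mixes in tokens 1 and 2
```

The real BERT model adds learned projections, multiple heads, and many stacked layers. It is also pretrained with a masked-language-model objective, so having access to right-hand context does not make the prediction task trivial.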

Synthetic oil is a lubricant made from a base oil produced through chemical processes, and it typically outperforms conventional mineral oil. Unlike traditional oils refined directly from crude petroleum, synthetic oils are engineered with precisely controlled molecular structures, often from...

Personal Protective Equipment (PPE) encompasses a range of specialized gear designed to shield individuals from workplace hazards, including physical, chemical, biological, and environmental risks. This essential safety gear includes items such as helmets for head protection, gloves for hand safety,...

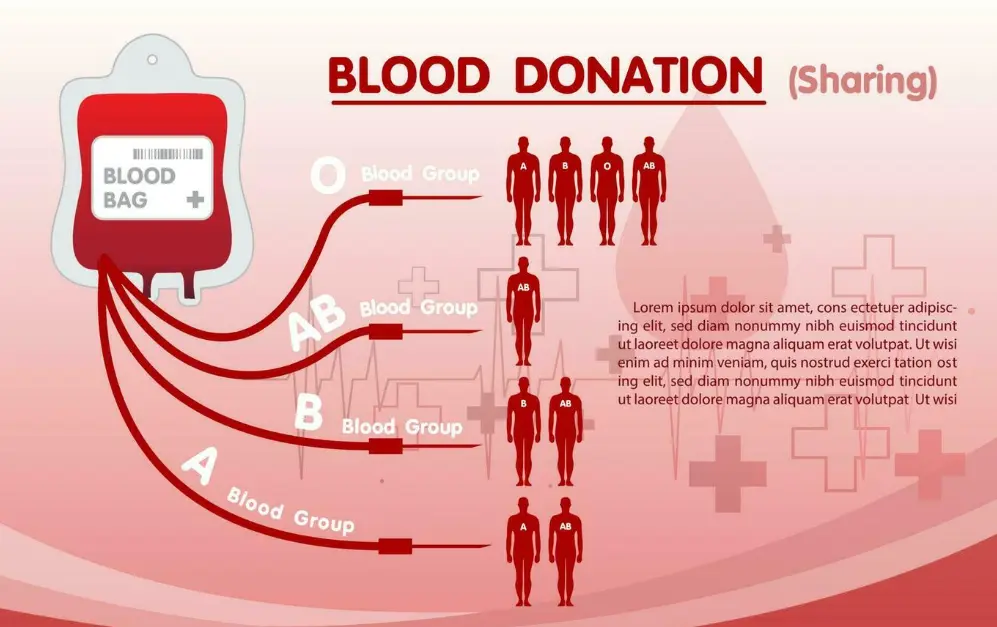

Blood donation safety is a critical aspect of ensuring the health and well-being of both donors and recipients. Before donating, potential donors undergo a thorough screening process, including a review of medical history, vital signs, and blood tests to identify...

Excel is a versatile tool for data analysis, enabling users to transform raw data into actionable insights through built-in features and functions.

## Key Features

- Sorting and Filtering: Quickly organize data by arranging rows based on criteria or using filters...
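
To make the sort-and-filter idea concrete outside the spreadsheet, here is a rough Python analogue. It works on plain lists of dicts and is not Excel's own behavior. The helpers `sort_rows` and `filter_rows` and the sales data are hypothetical.

```python
from operator import itemgetter
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def sort_rows(rows: List[Row], column: str, descending: bool = False) -> List[Row]:
    """Rough analogue of sorting a range on one column; returns a new list."""
    return sorted(rows, key=itemgetter(column), reverse=descending)


def filter_rows(rows: List[Row], predicate: Callable[[Row], bool]) -> List[Row]:
    """Rough analogue of a filter: keeps only rows for which predicate(row) is True."""
    return [row for row in rows if predicate(row)]


# Hypothetical sales table: each dict is one spreadsheet row.
sales = [
    {"region": "East", "product": "Widget", "units": 120},
    {"region": "West", "product": "Gadget", "units": 45},
    {"region": "East", "product": "Gadget", "units": 80},
    {"region": "West", "product": "Widget", "units": 150},
]

# Filter to the East region, then sort by units, largest first.
east = sort_rows(filter_rows(sales, lambda r: r["region"] == "East"), "units", descending=True)
for row in east:
    print(row["product"], row["units"])  # Widget 120, then Gadget 80
```

Both helpers return new lists and leave `sales` unchanged. This matches a filter that hides rows without deleting them more closely than an in-place sort does.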

1. FBA Insight Analyzer: An AI-powered dashboard that scans your Amazon seller account data to generate real-time reports on inventory levels, sales trends, and profitability metrics. It uses predictive analytics to forecast demand and suggest when to restock.
2. FBA Compliance...

WhatsApp is a leading cross-platform messaging app developed by WhatsApp Inc., now owned by Meta Platforms. Launched in 2009, it lets users send text messages, make voice and video calls, share photos, videos, documents, and location...

Mathematical psychology is an interdisciplinary field that applies mathematical models and quantitative methods to study psychological processes, bridging empirical psychology and formal sciences such as mathematics and statistics. The field emerged in the mid-20th century, influenced by pioneers...

Sharia law, also known as Islamic law, is a comprehensive legal and moral framework derived from the classical sources of Islamic jurisprudence: the Quran, the Sunnah (the teachings and practices of the Prophet Muhammad), ijma (scholarly consensus), and qiyas (analogical reasoning)....

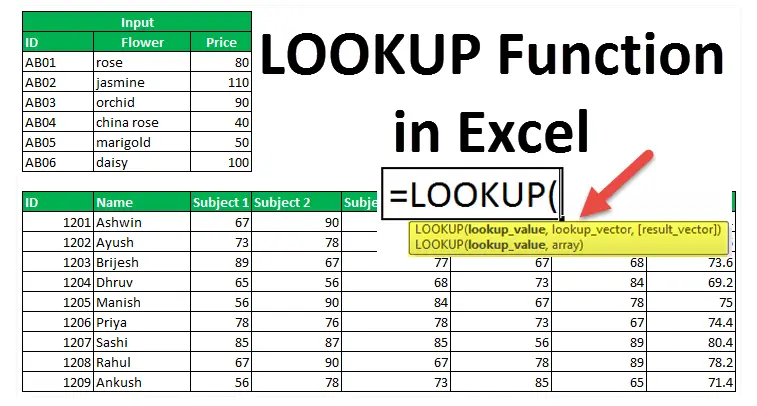

Lookup functions in Excel are essential tools for searching and retrieving data from tables or ranges, enabling efficient data analysis and manipulation.

## Key Lookup Functions

1. VLOOKUP
   - Purpose: Searches for a value in the first column of a...
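
VLOOKUP's behavior depends on its match mode, so the sketch below models both modes in Python. It assumes the standard argument order `VLOOKUP(lookup_value, table_array, col_index_num, [range_lookup])`. In this model, `col_index_num` is 1-based. An exact match (`range_lookup` FALSE) returns the first matching row. An approximate match (TRUE, the default) assumes the first column is sorted ascending and takes the largest value less than or equal to the lookup value. The `vlookup` function, the `NA` stand-in for `#N/A`, and the sample data are hypothetical. Comparisons use plain `==`, so unlike Excel this sketch is case-sensitive for text and ignores wildcards.

```python
from bisect import bisect_right
from typing import Any, Sequence

NA = "#N/A"  # stand-in for Excel's #N/A error value


def vlookup(lookup_value: Any, table: Sequence[Sequence[Any]], col_index_num: int,
            range_lookup: bool = True) -> Any:
    """Hypothetical Python model of VLOOKUP over a list of rows."""
    if col_index_num < 1 or any(col_index_num > len(row) for row in table):
        # Excel reports an error in this case; this sketch raises instead.
        raise ValueError("col_index_num is out of range")
    if not range_lookup:
        # Exact match: return from the first row whose first column equals lookup_value.
        for row in table:
            if row[0] == lookup_value:
                return row[col_index_num - 1]
        return NA
    # Approximate match: assumes the first column is sorted ascending and picks the
    # row with the largest first-column value that is <= lookup_value (binary search).
    first_col = [row[0] for row in table]
    pos = bisect_right(first_col, lookup_value)
    if pos == 0:
        return NA  # lookup_value is smaller than every entry
    return table[pos - 1][col_index_num - 1]


# Made-up example data.
parts = [["A100", "Bolt", 0.25], ["B200", "Nut", 0.10], ["C300", "Washer", 0.05]]
grades = [[0, "F"], [60, "D"], [70, "C"], [80, "B"], [90, "A"]]

print(vlookup("B200", parts, 2, False))  # Nut
print(vlookup("Z999", parts, 2, False))  # #N/A
print(vlookup(85, grades, 2))            # B
```

The approximate mode relies on a binary search over the first column. For that reason, it can return unexpected results when that column is not sorted.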