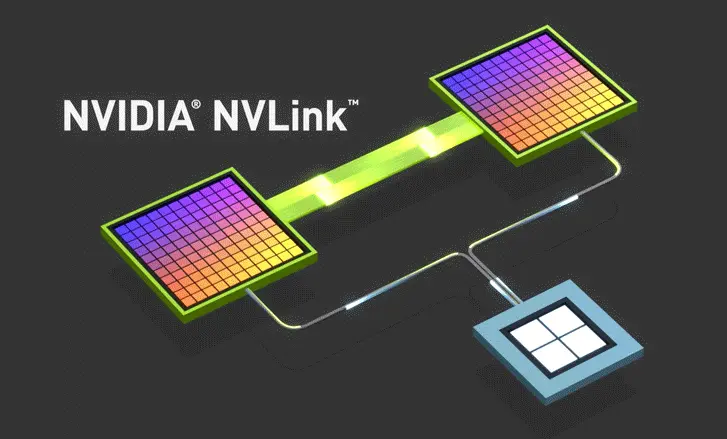

Nvidia NVLink is a high-speed interconnect technology designed to enhance communication between GPUs and other components in computing systems. It enables direct, peer-to-peer data transfer, bypassing traditional CPU bottlenecks for faster processing.

Key Features:

– High Bandwidth and Low Latency: NVLink 4.0 offers up to 900 GB/s of total bidirectional bandwidth per GPU, allowing fast data exchange between multiple GPUs.

– Scalability: It supports multi-GPU configurations, making it ideal for scaling applications in AI, machine learning, and high-performance computing (HPC).

– Integration: NVLink is built into Nvidia’s data center GPUs, such as those in the HGX platform, and works with NVSwitch for even larger interconnect fabrics.

– Versions and Evolution: Starting from NVLink 1.0 (introduced with Pascal architecture), it has progressed to NVLink 4.0, with each iteration increasing speed and efficiency.
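
The bandwidth figures quoted for each generation are per-GPU totals: the number of links on the GPU multiplied by the bidirectional bandwidth of one link. The short Python sketch below works through that arithmetic. The link counts and per-link rates are the commonly published figures for the flagship data center GPU of each generation (P100, V100, A100, H100) and are included here as an assumption for illustration; the names `NVLINK_GENERATIONS` and `total_bandwidth_gbs` are made up for this example.

```python
# Per-GPU NVLink bandwidth = number of links x bidirectional GB/s per link.
# Values are the commonly published figures for each flagship GPU
# (an assumption for illustration, not taken from this article).
NVLINK_GENERATIONS = {
    "NVLink 1.0 (Pascal P100)": (4, 40),
    "NVLink 2.0 (Volta V100)": (6, 50),
    "NVLink 3.0 (Ampere A100)": (12, 50),
    "NVLink 4.0 (Hopper H100)": (18, 50),
}


def total_bandwidth_gbs(links, per_link_gbs):
    """Return the total bidirectional bandwidth per GPU in GB/s."""
    return links * per_link_gbs


for name, (links, per_link) in NVLINK_GENERATIONS.items():
    total = total_bandwidth_gbs(links, per_link)
    print(f"{name}: {links} links x {per_link} GB/s = {total} GB/s")
```

Under these assumptions the totals come out to 160, 300, 600, and 900 GB/s, which is why a single headline number such as 900 GB/s refers to a whole GPU rather than to one link.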

Benefits:

– Accelerated Workloads: Reduces data transfer times, improving performance in tasks like deep learning training and scientific simulations.

– Energy Efficiency: Optimizes power usage by minimizing unnecessary data movement.

– Ecosystem Support: Complements Nvidia’s CUDA platform, enabling developers to write efficient code for parallel computing.

Applications:

– In AI and ML, NVLink powers faster model training on systems like DGX A100.

– For HPC, it’s used in supercomputers for complex simulations, such as weather modeling or drug discovery.

– In gaming and graphics, it enhances real-time rendering in professional workstations.

NVLink represents a cornerstone of Nvidia’s strategy for advanced computing, driving innovation in data-intensive fields by fostering tighter integration between hardware components.

Table of Contents

- Part 1: Create An Amazing Nvidia NVLink Quiz Using AI Instantly in OnlineExamMaker

- Part 2: 20 Nvidia NVLink Quiz Questions & Answers

- Part 3: OnlineExamMaker AI Question Generator: Generate Questions for Any Topic

Part 1: Create An Amazing Nvidia NVLink Quiz Using AI Instantly in OnlineExamMaker

More and more people now create Nvidia NVLink quizzes using AI. OnlineExamMaker is a powerful AI-based quiz-making tool that can save you time and effort. The software makes it simple to design and launch interactive quizzes, assessments, and surveys. With the Question Editor, you can create multiple-choice, open-ended, matching, sequencing, and many other types of questions for your tests, exams, and inventories. You can also enhance quizzes with multimedia elements such as images, audio, and video to make them more interactive and visually appealing.

Recommended features for you:

● Prevent cheating by randomizing questions or changing the order of questions, so learners don’t get the same set of questions each time.

● Automatically generate detailed reports: individual scores, question reports, and group performance.

● Copy a few lines of code into a web page to present your online quiz on your website, blog, or landing page.

● Offers question analysis to evaluate question performance and reliability, helping instructors optimize their training plan.

Part 2: 20 Nvidia NVLink Quiz Questions & Answers

Question 1:

What is the primary purpose of Nvidia NVLink?

A) To connect storage devices

B) To enable high-speed communication between GPUs and CPUs

C) To manage power consumption in servers

D) To handle network traffic

Answer: B

Explanation: NVLink is designed to provide a high-bandwidth, low-latency interconnect for direct GPU-to-GPU and GPU-to-CPU communication, which is essential for accelerating workloads in AI, HPC, and data centers.

Question 2:

Which generation of NVLink supports up to 600 GB/s of total bidirectional bandwidth per GPU?

A) NVLink 1.0

B) NVLink 2.0

C) NVLink 3.0

D) NVLink 4.0

Answer: C

Explanation: NVLink 3.0, introduced with the Ampere architecture, offers up to 600 GB/s of total bidirectional bandwidth per GPU (12 links at 50 GB/s each), enabling faster data transfer for complex computing tasks.

Question 3:

NVLink is most commonly used in which type of systems?

A) Consumer gaming PCs

B) Enterprise servers for web hosting

C) AI and high-performance computing clusters

D) Mobile devices

Answer: C

Explanation: NVLink is optimized for AI training, scientific simulations, and HPC environments where multiple GPUs need to work together seamlessly.

Question 4:

How does NVLink differ from traditional PCIe in terms of data transfer?

A) PCIe is faster for long-distance connections

B) NVLink provides higher bandwidth and lower latency for GPU interconnects

C) They are essentially the same technology

D) PCIe supports more devices per system

Answer: B

Explanation: NVLink offers significantly higher bandwidth (up to 900 GB/s in later generations) and reduced latency compared to PCIe, making it ideal for inter-GPU communication.
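
To make the bandwidth gap concrete, here is a rough Python sketch that compares ideal transfer times over the two interconnects. It assumes 450 GB/s per direction for NVLink 4.0 (half of the 900 GB/s bidirectional total) and roughly 64 GB/s per direction for a PCIe 5.0 x16 slot; the 80 GB payload and the function name `ideal_transfer_seconds` are hypothetical. Real transfers are slower because of protocol overhead and latency, so treat the results as lower bounds.

```python
# Ideal (overhead-free) time to move a payload in one direction.
# Bandwidth values are approximate, assumed figures for illustration.
NVLINK4_PER_DIRECTION_GBS = 450.0    # half of 900 GB/s bidirectional
PCIE5_X16_PER_DIRECTION_GBS = 64.0   # approximate PCIe 5.0 x16 rate


def ideal_transfer_seconds(payload_gb, link_gbs):
    """Lower bound on transfer time: payload size divided by bandwidth."""
    if link_gbs <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / link_gbs


payload_gb = 80.0  # hypothetical payload, e.g. a large block of model state
nvlink_s = ideal_transfer_seconds(payload_gb, NVLINK4_PER_DIRECTION_GBS)
pcie_s = ideal_transfer_seconds(payload_gb, PCIE5_X16_PER_DIRECTION_GBS)

print(f"NVLink 4.0: {nvlink_s:.3f} s")
print(f"PCIe 5.0 x16: {pcie_s:.3f} s")
print(f"Ratio: {pcie_s / nvlink_s:.1f}x")
```

Under these assumptions the same payload takes about seven times longer over PCIe than over NVLink.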

Question 5:

What is the maximum number of GPUs that can be connected via NVLink in a single DGX A100 system?

A) 2

B) 8

C) 16

D) 32

Answer: B

Explanation: In a DGX A100 system, up to 8 GPUs can be interconnected using NVLink, allowing for efficient scaling of compute resources.

Question 6:

Which Nvidia architecture introduced NVLink 2.0?

A) Pascal

B) Volta

C) Turing

D) Ampere

Answer: B

Explanation: NVLink 2.0 was first introduced with the Volta architecture, supporting up to 300 GB/s of total bidirectional bandwidth per GPU to enhance multi-GPU performance.

Question 7:

What type of signaling does NVLink use?

A) Serial signaling

B) Parallel signaling

C) Optical signaling

D) Wireless signaling

Answer: A

Explanation: NVLink uses high-speed serial signaling over copper connections, which allows for reliable and fast data transfer between components.

Question 8:

In NVLink, what does the term “link” refer to?

A) A physical cable

B) A software protocol

C) A pair of unidirectional connections

D) A memory buffer

Answer: C

Explanation: An NVLink link consists of a pair of unidirectional connections, enabling full-duplex communication between devices.

Question 9:

Which of the following benefits does NVLink provide for AI workloads?

A) Reduced energy efficiency

B) Faster model training through direct GPU communication

C) Limited scalability

D) Increased latency

Answer: B

Explanation: NVLink allows GPUs to share data directly without going through the CPU or slower interconnects, speeding up AI model training and inference.
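
On a machine with the Nvidia driver installed, you can check whether GPUs are actually connected by NVLink with the `nvidia-smi` command-line tool. The Python sketch below wraps two of its subcommands, `nvidia-smi topo -m` (the GPU topology matrix) and `nvidia-smi nvlink --status` (per-link status). The helper name `run_nvidia_smi` is hypothetical, and the function simply returns `None` when the tool is missing or fails, so it is safe to run on a machine without a GPU.

```python
import shutil
import subprocess


def run_nvidia_smi(args, timeout_s=10):
    """Run nvidia-smi with the given arguments.

    Returns the command's standard output as a string, or None if the
    tool is not installed, times out, or exits with an error.
    """
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None
    try:
        result = subprocess.run(
            [exe, *args],
            capture_output=True,
            text=True,
            timeout=timeout_s,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    return result.stdout if result.returncode == 0 else None


# Topology matrix: NVLink connections between GPUs appear as "NV#" entries.
topology = run_nvidia_smi(["topo", "-m"])
# Per-link status for each GPU.
link_status = run_nvidia_smi(["nvlink", "--status"])

for label, output in (("Topology", topology), ("NVLink status", link_status)):
    print(f"--- {label} ---")
    print(output if output is not None else "nvidia-smi not available")
```

In the topology matrix, an entry such as `NV12` between two GPUs indicates a connection over a bonded set of 12 NVLinks, while entries such as `PIX` or `SYS` indicate paths that go over PCIe.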

Question 10:

What is the typical connector type used for NVLink in servers?

A) USB-C

B) HDMI

C) Proprietary high-speed connectors

D) Ethernet ports

Answer: C

Explanation: NVLink uses proprietary high-speed connectors designed specifically for Nvidia hardware to ensure optimal performance in data center environments.

Question 11:

NVLink 4.0, which supports up to 900 GB/s per GPU, was introduced with which architecture?

A) Turing

B) Ampere

C) Hopper

D) Ada

Answer: C

Explanation: NVLink 4.0 is part of the Hopper architecture, providing up to 900 GB/s of total bidirectional bandwidth per GPU (18 links at 50 GB/s each) to support advanced AI and simulation workloads.

Question 12:

How does NVLink 2.0 improve upon NVLink 1.0 in terms of bandwidth?

A) It reduces bandwidth

B) It nearly doubles total per-GPU bandwidth, to 300 GB/s

C) It maintains the same bandwidth

D) It introduces wireless capabilities

Answer: B

Explanation: NVLink 1.0 on the Pascal P100 provides 4 links at 40 GB/s bidirectional each (20 GB/s per direction), for 160 GB/s per GPU. NVLink 2.0 on the Volta V100 raises this to 6 links at 50 GB/s each, for 300 GB/s per GPU, enhancing performance for multi-GPU setups.

Question 13:

Which component is NOT directly supported by NVLink?

A) GPUs

B) CPUs

C) Solid-state drives

D) NVSwitches

Answer: C

Explanation: NVLink primarily connects GPUs and CPUs, and can work with NVSwitches for scaling, but it does not directly support storage devices like SSDs.

Question 14:

In a multi-GPU setup, what role does NVSwitch play with NVLink?

A) It replaces NVLink entirely

B) It enables full connectivity between multiple GPUs

C) It slows down data transfer

D) It is used for external networking

Answer: B

Explanation: NVSwitch works with NVLink to create a fully connected mesh network among GPUs, allowing any GPU to communicate directly with any other.
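
A small back-of-the-envelope calculation shows why a switch helps. The Python sketch below assumes an 8-GPU system in which each GPU has 12 links at 50 GB/s (the commonly published A100 figures) and compares a hypothetical direct mesh, where each GPU splits its links evenly among its 7 peers, with a switched fabric, where a GPU can direct all of its links at a single peer. The function names are made up for this example, and the model ignores contention from other traffic.

```python
def gpu_pairs(num_gpus):
    """Number of distinct GPU pairs that may need to communicate."""
    return num_gpus * (num_gpus - 1) // 2


def direct_mesh_pair_bandwidth(num_gpus, links_per_gpu, per_link_gbs):
    """Per-pair bandwidth if each GPU splits its links evenly across peers."""
    links_per_peer = links_per_gpu // (num_gpus - 1)
    return links_per_peer * per_link_gbs


def switched_pair_bandwidth(links_per_gpu, per_link_gbs):
    """Per-pair bandwidth when all of a GPU's links reach the peer via a switch."""
    return links_per_gpu * per_link_gbs


NUM_GPUS = 8        # assumed system size
LINKS_PER_GPU = 12  # assumed NVLink 3.0 link count per GPU
PER_LINK_GBS = 50   # assumed bidirectional GB/s per link

print("GPU pairs:", gpu_pairs(NUM_GPUS))
print("Direct mesh, GB/s per pair:",
      direct_mesh_pair_bandwidth(NUM_GPUS, LINKS_PER_GPU, PER_LINK_GBS))
print("Switched, GB/s per pair:",
      switched_pair_bandwidth(LINKS_PER_GPU, PER_LINK_GBS))
```

With these numbers there are 28 GPU pairs. A direct mesh leaves only one 50 GB/s link per pair, whereas the switched fabric lets any single pair use up to the full 600 GB/s when no other traffic is competing for it.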

Question 15:

What is the latency range typically associated with NVLink?

A) Over 1 millisecond

B) Around 100 nanoseconds

C) 1 second

D) 10 milliseconds

Answer: B

Explanation: NVLink achieves latencies as low as 100 nanoseconds, which is crucial for real-time applications and high-performance computing tasks.

Question 16:

Which Nvidia product line heavily relies on NVLink for its design?

A) GeForce gaming cards

B) Tesla compute cards

C) Shield TV devices

D) Quadro professional cards

Answer: B

Explanation: The Tesla line, used for data centers and AI, incorporates NVLink to connect multiple GPUs for accelerated computing.

Question 17:

NVLink supports which memory coherence model?

A) None, it only handles data transfer

B) Cache coherence for shared memory access

C) Direct memory access only

D) File system coherence

Answer: B

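Explanation: NVLink supports cache coherence, allowing GPUs (and CPUs on supported platforms) to access a shared memory space coherently without explicit copies between separate memories. Applications still need to synchronize concurrent accesses to shared data.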

Question 18:

How many signal lanes (differential pairs) per direction does an NVLink 3.0 link use?

A) 1

B) 4

C) 8

D) 16

Answer: B

Explanation: An NVLink 3.0 link uses 4 differential pairs in each direction. The lane count varies by generation: NVLink 1.0 and 2.0 use 8 pairs per direction, and NVLink 4.0 uses 2.

Question 19:

How does NVLink contribute to energy efficiency in data centers?

A) By increasing power usage

B) By reducing the need for data movement over slower links

C) By adding more hardware components

D) By limiting computational power

Answer: B

Explanation: NVLink minimizes data transfer overhead and latency, which leads to faster computations and overall energy savings in large-scale data center operations.

Question 20:

Which standard does NVLink compete with in GPU interconnects?

A) USB 3.0

B) PCIe

C) SATA

D) Thunderbolt

Answer: B

Explanation: NVLink is often compared to PCIe as an alternative for high-speed GPU interconnects, offering superior performance for Nvidia ecosystems.

Part 3: OnlineExamMaker AI Question Generator: Generate Questions for Any Topic

Automatically generate questions using AI