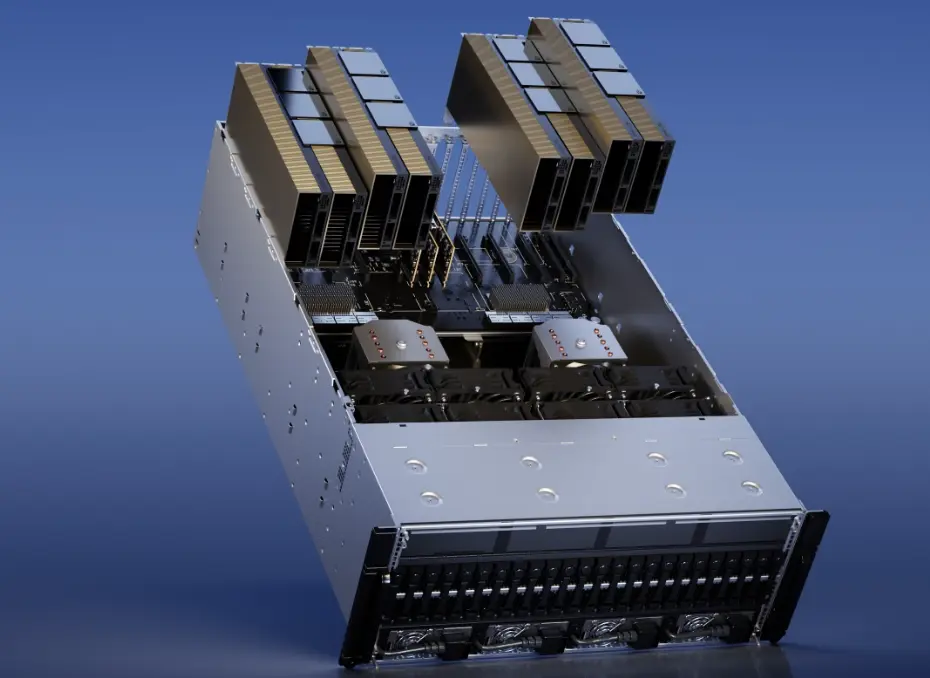

The Nvidia H-Series, led by the H100 GPU, is a cutting-edge lineup designed for accelerated computing in AI, data centers, and high-performance workloads. Built on the Hopper architecture, it delivers exceptional performance through advanced features like:

– Hopper Architecture: Delivers up to about 67 teraflops of FP64 Tensor Core performance (34 teraflops of standard FP64) on the H100 SXM and supports massive parallel processing for AI training and inference.

– High-Bandwidth Memory (HBM3): Provides about 3.35 TB/s of bandwidth on the H100 SXM (the HBM3e-equipped H200 reaches about 4.8 TB/s), speeding up data-intensive tasks such as large-scale simulations and machine learning.

– Multi-Instance GPU (MIG): Allows partitioning of the GPU for better resource allocation in virtualized environments.

– Energy Efficiency: Offers high performance per watt, reducing operational costs for enterprise deployments.

Key applications include:

– AI and machine learning, such as training and serving large language models.

– High-performance computing (HPC) for scientific research and weather modeling.

– Data center operations for cloud services and edge computing.

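– Video and image processing in AI pipelines, using the H100’s hardware video and JPEG decoders (the H100 is not designed for graphics rendering).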

The H-Series sets new benchmarks in speed and efficiency: Nvidia cites multi-fold speedups for the H100 over the previous-generation A100 in both AI training and inference, making it a key platform for modern computing demands. For detailed specifications, consult Nvidia’s official resources.

Table of Contents

- Part 1: OnlineExamMaker AI Quiz Generator – The Easiest Way to Make Quizzes Online

- Part 2: 20 Nvidia H-Series Quiz Questions & Answers

- Part 3: OnlineExamMaker AI Question Generator: Generate Questions for Any Topic

Part 1: OnlineExamMaker AI Quiz Generator – The Easiest Way to Make Quizzes Online

Are you looking for an online assessment to test your learners’ knowledge of the Nvidia H-Series? OnlineExamMaker uses artificial intelligence to help quiz organizers create, manage, and analyze exams or tests automatically. Apart from its AI features, OnlineExamMaker offers advanced security features such as a full-screen lockdown browser, online webcam proctoring, and face ID recognition.

Take a product tour of OnlineExamMaker:

● Includes a safe exam browser (lockdown mode), webcam and screen recording, live monitoring, and chat oversight to prevent cheating.

● AI Exam Grader for efficiently grading quizzes and assignments, offering inline comments, automatic scoring, and “fudge points” for manual adjustments.

● Embed quizzes on websites, blogs, or share via email, social media (Facebook, Twitter), or direct links.

● Handles large-scale testing (thousands of exams/semester) without internet dependency, backed by cloud infrastructure.


Part 2: 20 Nvidia H-Series Quiz Questions & Answers


Question 1: What is the primary architecture used in Nvidia’s H100 GPU?

A) Ampere

B) Turing

C) Hopper

D) Volta

Answer: C) Hopper

Explanation: The H100 GPU is built on Nvidia’s Hopper architecture, which enhances performance for AI workloads through advanced computing capabilities.

Question 2: Which of the following is a key feature of Nvidia H-Series GPUs for AI applications?

A) Ray tracing cores

B) Tensor cores

C) Video encoding units

D) Memory compression

Answer: B) Tensor cores

Explanation: Tensor cores in H-Series GPUs accelerate matrix operations, making them essential for machine learning and AI training tasks.
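Conceptually, each Tensor Core instruction performs a small fused matrix multiply-accumulate, D = A × B + C, on a tile of values. The pure-Python sketch below only illustrates that arithmetic; the 4×4 tile is an assumption for illustration, and it ignores precision entirely, whereas real Tensor Cores run this in hardware with tile shapes and input/accumulator precisions that depend on the GPU generation and data type.

```python
from typing import List

Matrix = List[List[float]]

def mma_tile(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A @ B + C for small square tiles (conceptual Tensor Core operation)."""
    n = len(a)
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # Start from the accumulator value, then add the dot product of row i and column j.
            acc = c[i][j]
            for k in range(n):
                acc += a[i][k] * b[k][j]
            d[i][j] = acc
    return d

# Example: identity @ B + C is simply B + C.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b = [[float(i * 4 + j) for j in range(4)] for i in range(4)]
c = [[1.0] * 4 for _ in range(4)]
print(mma_tile(identity, b, c)[0])  # [1.0, 2.0, 3.0, 4.0]
```

The hardware gains its speed by doing a whole tile of these multiply-adds per instruction instead of one scalar operation at a time.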

Question 3: What is the typical memory type used in Nvidia H100 GPUs?

A) GDDR5

B) HBM3

C) DDR4

D) GDDR6X

Answer: B) HBM3

Explanation: The H100 SXM uses HBM3 memory, which provides high bandwidth and efficiency for data-intensive applications like deep learning; the PCIe version uses HBM2e.

Question 4: How does the H100 GPU improve upon its predecessor in terms of floating-point performance?

A) It reduces performance by 20%

B) It offers up to 2x the performance

C) It maintains the same level

D) It decreases by 10%

Answer: B) It offers up to 2x the performance

Explanation: Clock for clock, each Hopper SM delivers roughly 2x the floating-point throughput of an A100 SM; with more SMs and higher clocks, the full H100 SXM reaches about 3x the A100’s FP64 and FP32 rates, speeding up scientific simulations.

Question 5: Which of the following Nvidia GPUs is designed primarily for data center and enterprise use?

A) H100

B) RTX 3060

C) GTX 1080

D) Titan X

Answer: A) H100

Explanation: The H100 is optimized for data centers, focusing on scalability and efficiency in large-scale computing environments.

Question 6: What role do multi-instance GPUs (MIG) play in H-Series GPUs?

A) They enable overclocking

B) They allow partitioning of the GPU for multiple users

C) They improve gaming resolution

D) They reduce power consumption

Answer: B) They allow partitioning of the GPU for multiple users

Explanation: MIG technology in H-Series GPUs divides the GPU into up to seven isolated instances, each with its own compute, cache, and memory, enabling efficient resource sharing in multi-tenant environments.
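To make the partitioning idea concrete, here is a simplified budget check for MIG layouts. It assumes an H100 80GB exposes 7 compute slices and 8 memory slices, and that the profile-to-slice mapping in `PROFILES` is as listed; both are assumptions to verify with `nvidia-smi mig -lgip` on real hardware. The `fits` helper is hypothetical and ignores MIG’s placement rules, so a `True` result is necessary but not sufficient.

```python
from typing import Dict, Iterable, Tuple

# Assumed (compute slices, memory slices) per GPU-instance profile on an H100 80GB.
PROFILES: Dict[str, Tuple[int, int]] = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}
COMPUTE_SLICES = 7  # up to seven MIG instances per GPU
MEMORY_SLICES = 8   # assumed: eight 10 GB memory slices

def fits(requested: Iterable[str]) -> bool:
    """Hypothetical check: do the requested instances fit the slice budget?

    Real MIG placement has additional position rules that this sketch ignores.
    """
    compute = memory = 0
    for name in requested:
        c, m = PROFILES[name]
        compute += c
        memory += m
    return compute <= COMPUTE_SLICES and memory <= MEMORY_SLICES

print(fits(["3g.40gb", "4g.40gb"]))  # True: 7 compute and 8 memory slices
print(fits(["4g.40gb", "4g.40gb"]))  # False: would need 8 compute slices
```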

Question 7: Which interconnect technology is commonly associated with Nvidia H100 for scaling in clusters?

A) PCIe 3.0

B) NVLink

C) USB-C

D) Thunderbolt

Answer: B) NVLink

Explanation: NVLink provides high-speed communication between H100 GPUs, allowing for faster data transfer in clustered computing setups.
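Interconnect bandwidth matters because multi-GPU training repeatedly synchronizes gradients. A common back-of-the-envelope model for a ring all-reduce is that each GPU sends about 2(N-1)/N × S bytes for an S-byte buffer across N GPUs. The sketch applies that textbook formula with an assumed 450 GB/s per direction (half of fourth-generation NVLink’s 900 GB/s total on the H100 SXM); it ignores latency, protocol overhead, and topology, so treat the result as a rough lower bound. The 14 GB gradient buffer is a hypothetical example.

```python
def ring_allreduce_seconds(buffer_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate of ring all-reduce time (ignores latency and overheads)."""
    if num_gpus < 2:
        return 0.0  # nothing to exchange on a single GPU
    # Each GPU transfers 2 * (N - 1) / N of the buffer: reduce-scatter plus all-gather.
    traffic = 2 * (num_gpus - 1) / num_gpus * buffer_bytes
    return traffic / link_bytes_per_s

# Hypothetical: 14 GB of FP16 gradients (a 7B-parameter model) across 8 GPUs.
t = ring_allreduce_seconds(14e9, 8, 450e9)
print(f"{t * 1e3:.1f} ms")  # 54.4 ms
```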

Question 8: Approximately what peak memory bandwidth does the H100 SXM GPU offer?

A) 1 TB/s

B) 2 TB/s

C) 3 TB/s

D) 4 TB/s

Answer: C) 3 TB/s

Explanation: The H100 SXM’s HBM3 delivers about 3.35 TB/s, so 3 TB/s is the closest option (the PCIe version, with HBM2e, provides about 2 TB/s). This bandwidth enables rapid data access for AI and HPC tasks.
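Why bandwidth matters in practice: in autoregressive LLM inference at small batch sizes, generating each token typically means reading every weight from memory once, so bandwidth sets a floor on per-token latency. The sketch below is a simplified bandwidth-only estimate that assumes about 3.35 TB/s and a hypothetical 7B-parameter FP16 model; it ignores caches, KV-cache traffic, and compute time.

```python
def min_pass_seconds(num_params: float, bytes_per_param: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on time to stream every weight from HBM once (bandwidth-bound)."""
    return num_params * bytes_per_param / bandwidth_bytes_per_s

# Hypothetical: 7B parameters in FP16 (2 bytes each) on an H100 SXM (~3.35 TB/s).
t = min_pass_seconds(7e9, 2, 3.35e12)
print(f"{t * 1e3:.2f} ms per full read of the weights")  # 4.18 ms per full read of the weights
```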

Question 9: In what way does the H100 GPU support sparse tensor operations?

A) It disables them entirely

B) It accelerates them using specialized hardware

C) It ignores them for efficiency

D) It converts them to dense operations

Answer: B) It accelerates them using specialized hardware

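Explanation: H100’s Tensor Cores support 2:4 fine-grained structured sparsity, in which at most two of every four weights are nonzero; the hardware skips the zeros, offering up to twice the math throughput for eligible neural network operations.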
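The 2:4 pattern is easy to see in code: in each contiguous group of four weights, keep the two largest magnitudes and zero the rest. This pure-Python sketch uses magnitude pruning, one common heuristic; it is not Nvidia’s tooling, and real workflows usually fine-tune the model after pruning to recover accuracy.

```python
from typing import List

def prune_2_of_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in every group of four (2:4 sparsity)."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = list(weights)
    for start in range(0, len(weights), 4):
        group = range(start, start + 4)
        # Indices of the two smallest magnitudes in this group of four.
        drop = sorted(group, key=lambda i: abs(weights[i]))[:2]
        for i in drop:
            pruned[i] = 0.0
    return pruned

print(prune_2_of_4([0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]))
# [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```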

Question 10: Which application is NOT typically associated with Nvidia H-Series GPUs?

A) Video editing

B) Scientific simulations

C) Large language model training

D) Cryptocurrency mining

Answer: D) Cryptocurrency mining

Explanation: Although the H100 can technically run mining workloads, it is designed and priced for AI, research, and simulation, and cryptocurrency mining is not a target use case.

Question 11: How does the H100 GPU enhance energy efficiency compared to older models?

A) By increasing power draw

B) By offering better performance per watt

C) By reducing core count

D) By limiting clock speeds

Answer: B) By offering better performance per watt

Explanation: H100 achieves higher energy efficiency through architectural improvements, delivering more computations per unit of power consumed.

Question 12: What is the purpose of the Transformer Engine in H100 GPUs?

A) To handle graphics rendering

B) To optimize large language models

C) To manage system cooling

D) To compress data

Answer: B) To optimize large language models

Explanation: The Transformer Engine in H100 accelerates transformer-based AI models by dynamically choosing between FP8 and 16-bit precision for each layer, reducing training and inference time for applications like NLP.
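FP8 has a narrow range, so values are multiplied by a scaling factor before conversion and divided by it afterward. The sketch below shows only that per-tensor scaling step for the E4M3 format (largest finite value 448), picking the scale from the tensor’s absolute maximum (amax). It is a simplified illustration: it does not emulate FP8 rounding, and the real Transformer Engine’s recipe (for example, deriving scales from a history of amax values) differs in detail.

```python
from typing import List, Tuple

E4M3_MAX = 448.0  # largest finite magnitude in the FP8 E4M3 format

def scale_for_fp8(values: List[float]) -> Tuple[List[float], float]:
    """Scale a tensor so its largest magnitude maps to E4M3_MAX; return (scaled, scale)."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    # Clip to the representable range (FP8 rounding itself is not modelled here).
    scaled = [max(-E4M3_MAX, min(E4M3_MAX, v * scale)) for v in values]
    return scaled, scale

def unscale(scaled: List[float], scale: float) -> List[float]:
    """Undo the scaling after the low-precision computation."""
    return [v / scale for v in scaled]

scaled, s = scale_for_fp8([0.5, -2.0, 1.0])
print(s, scaled)  # 224.0 [112.0, -448.0, 224.0]
```

Scaling lets small-magnitude tensors use FP8’s full range instead of underflowing toward zero.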

Question 13: Which factor contributes to the H100’s superiority in parallel processing?

A) Single-core design

B) High thread count per SM

C) Limited cache memory

D) Low clock frequency

Answer: B) High thread count per SM

Explanation: H100’s streaming multiprocessors support a high number of threads, enabling superior parallel processing for complex workloads.
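A simplified way to see how thread count per SM turns into parallelism: on Hopper (compute capability 9.0) each SM can hold up to 2,048 resident threads and has 65,536 32-bit registers, and the H100 SXM has 132 SMs. A kernel’s register use per thread can therefore cap how many threads stay resident. This sketch applies only those two limits with warp-level rounding; real occupancy also depends on shared memory, block size, and register allocation granularity, which CUDA’s occupancy tools account for.

```python
MAX_THREADS_PER_SM = 2048   # compute capability 9.0
REGISTERS_PER_SM = 65536    # 32-bit registers per SM
WARP_SIZE = 32
H100_SXM_SMS = 132

def resident_threads_per_sm(regs_per_thread: int) -> int:
    """Threads that fit on one SM given register use (ignores shared memory and blocks)."""
    by_registers = REGISTERS_PER_SM // regs_per_thread
    # Threads are scheduled in whole warps, so round down to a multiple of 32.
    by_registers -= by_registers % WARP_SIZE
    return min(MAX_THREADS_PER_SM, by_registers)

for regs in (32, 64, 128):
    per_sm = resident_threads_per_sm(regs)
    print(regs, per_sm, per_sm * H100_SXM_SMS)
# 32 2048 270336
# 64 1024 135168
# 128 512 67584
```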

Question 14: What type of computing does the H100 GPU excel in?

A) Mobile gaming

B) Accelerated computing

C) Basic office tasks

D) Embedded systems

Answer: B) Accelerated computing

Explanation: H100 is tailored for accelerated computing, including AI, machine learning, and high-performance computing applications.

Question 15: How does the H100 support heterogeneous computing environments?

A) By only working with Nvidia CPUs

B) Through integration with CUDA and other APIs

C) By excluding third-party software

D) By limiting API access

Answer: B) Through integration with CUDA and other APIs

Explanation: The H100 is programmed through CUDA and the libraries and frameworks built on it, and it works alongside x86 and Arm host CPUs (including Nvidia’s own Grace CPU in the Grace Hopper Superchip).

Question 16: Compared to AMD’s MI200 series, what advantage does the H100 offer?

A) Lower price point

B) Better AI inference performance

C) Smaller form factor

D) Reduced memory capacity

Answer: B) Better AI inference performance

Explanation: H100 generally provides faster AI inference thanks to its fourth-generation Tensor Cores, FP8 support, and Transformer Engine.

Question 17: What is the maximum TDP (Thermal Design Power) of the Nvidia H100 SXM module?

A) 100W

B) 350W

C) 700W

D) 1,000W

Answer: C) 700W

Explanation: The H100 SXM is configurable up to 700W, reflecting its high-performance design for demanding tasks; the H100 PCIe card is rated at 300-350W.

Question 18: Which security feature is included in H100 GPUs for enterprise use?

A) Basic encryption

B) Confidential computing

C) Open-source firmware

D) Wireless connectivity

Answer: B) Confidential computing

Explanation: H100 supports confidential computing, protecting data in use for secure enterprise and cloud environments.

Question 19: How does the H100 GPU handle large-scale model training?

A) By reducing dataset sizes

B) Through scalable memory and compute resources

C) By simplifying algorithms

D) By limiting batch sizes

Answer: B) Through scalable memory and compute resources

Explanation: H100’s large HBM capacity and NVLink-based multi-GPU scaling let large models be split across many GPUs and trained efficiently.
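A rough way to see why large models need both memory capacity and multi-GPU scaling: with mixed-precision Adam, a commonly cited estimate for model states is 16 bytes per parameter (FP16 weights and gradients plus FP32 master weights and two optimizer moments). The sketch assumes 80 GB per GPU and states sharded evenly across GPUs; it excludes activations, temporary buffers, and fragmentation, so real requirements are higher. The model sizes are hypothetical examples.

```python
import math

# fp16 weights + fp16 grads + fp32 master weights + Adam m + Adam v
BYTES_PER_PARAM_ADAM_MIXED = 2 + 2 + 4 + 4 + 4
H100_MEMORY_BYTES = 80e9  # assumed: H100 80GB variant

def min_gpus_for_model_states(num_params: float) -> int:
    """Minimum 80 GB GPUs needed just to hold model states if evenly sharded."""
    total = num_params * BYTES_PER_PARAM_ADAM_MIXED
    return math.ceil(total / H100_MEMORY_BYTES)

print(min_gpus_for_model_states(7e9))   # 112 GB of states -> 2
print(min_gpus_for_model_states(70e9))  # 1.12 TB of states -> 14
```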

Question 20: What makes the H100 suitable for real-time inference in edge computing?

A) High latency

B) Low power efficiency

C) Fast processing and low latency

D) Limited connectivity

Answer: C) Fast processing and low latency

Explanation: H100’s architecture delivers fast processing and low latency, making it well suited to real-time inference, including in edge servers that can accommodate its power and cooling requirements.


Part 3: OnlineExamMaker AI Question Generator: Generate Questions for Any Topic

Automatically generate questions using AI